From tracking macros and calories in Indian thalis to helping culinary enthusiasts understand the nuances of different types of biryanis, researchers at IIIT-H are using computer vision to conserve food culture and traditions.

If you’re someone who has been tracking your food intake via an app, you are already aware of the challenges it poses, especially when it comes to traditional Indian meals. Understanding an Indian meal is far more complex than analysing a burger or a sandwich, and that challenge is now at the heart of a growing research effort that is using artificial intelligence (AI) to decode Indian food, cooking and culture.

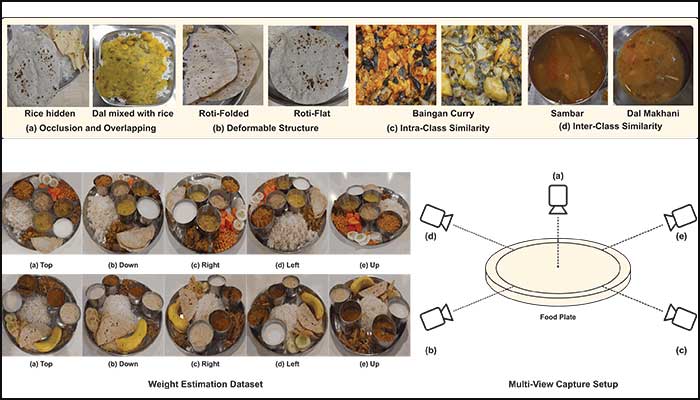

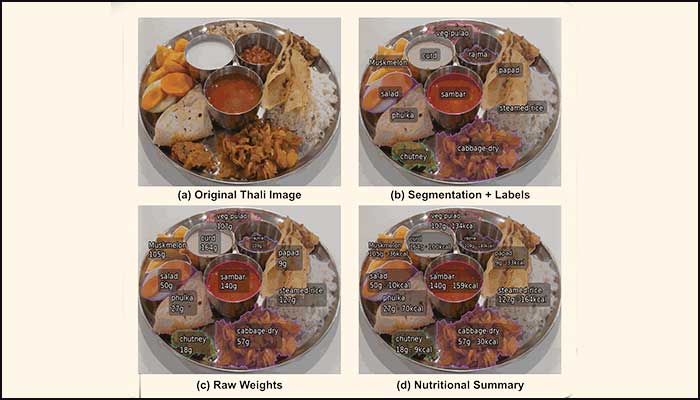

Researchers from the Center for Visual Information Technology, IIIT-H are developing tools to understand a typical Indian thali, a plate that often contains multiple dishes such as rice, dal, roti, chutney and curd, with mixed textures and overlapping ingredients. As Prof. CV Jawahar, who has been leading the project, asks, “If you are given a full plate of typical Indian food that not only has multiple dishes, but mixed ones like rice topped with dal, a roti hidden under a papad… how do you understand what is there on a plate and eventually its nutritional value?”

This problem is critical because most existing food-tracking apps are designed for discrete and standardized Western meals. “What we are talking about is how a typical Indian meal can be understood and characterised,” he says.

The research resulted in a paper titled “What is there in an Indian thali”, authored by Yash Arora and Aditya Arun under the guidance of Prof. Jawahar, which was presented at the 16th Indian Conference on Computer Vision, Graphics and Image Processing (ICVGIP 2025).

Why AI Struggles With Traditional Plates

Existing food-scanner and nutrition systems assume a fixed menu and stable recipes. Indian food breaks both assumptions. “In our everyday meals, sambar and dal can look alike one day, maybe due to the same amount of turmeric added to both. Or one day, you can have plain yellow dal, and the next it may be green due to the addition of palak,” notes Yash. Additionally, in cafeterias, menus can change overnight. Training a traditional supervised model again and again is simply impractical.

IIIT-H’s Zero-Shot Solution

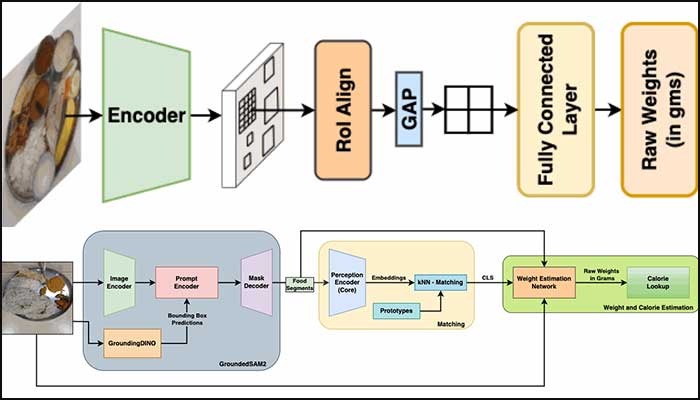

Instead of retraining models, the team built a zero-shot system – one that can recognize new food items without starting over. According to Yash, the system first identifies food regions without knowing exactly what the food item is. Then, instead of rigid classification, the system uses retrieval-based prototype matching. This approach makes the system scalable, flexible, and realistic for places like cafeterias and hospital messes.
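The idea of retrieval-based prototype matching can be illustrated with a minimal sketch. This is not the team's implementation: the article does not say which embedding model or similarity measure is used, so the cosine similarity, the `PrototypeIndex` class, the threshold value and the tiny hand-made 3-dimensional "embeddings" below are all assumptions made purely for illustration. The point it shows is that a new dish is supported by adding a reference vector, with no retraining step.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class PrototypeIndex:
    """Hypothetical store of reference embeddings (prototypes) per dish."""

    def __init__(self):
        self.prototypes = {}  # dish name -> list of embedding vectors

    def add(self, dish, embedding):
        # Supporting a new dish means storing a vector; nothing is retrained.
        self.prototypes.setdefault(dish, []).append(embedding)

    def match(self, region_embedding, threshold=0.8):
        """Return the closest dish, or 'unknown' if nothing is similar enough."""
        best_name, best_score = "unknown", -1.0
        for dish, vectors in self.prototypes.items():
            for vector in vectors:
                score = cosine(region_embedding, vector)
                if score > best_score:
                    best_name, best_score = dish, score
        if best_score < threshold:
            return "unknown", best_score
        return best_name, best_score


# Toy vectors standing in for embeddings of detected food regions (assumed).
index = PrototypeIndex()
index.add("rice", [0.9, 0.1, 0.0])
index.add("dal", [0.1, 0.9, 0.1])

rice_label, _ = index.match([0.85, 0.15, 0.05])

new_region = [0.0, 0.1, 0.95]          # a dish not on yesterday's menu
before_label, _ = index.match(new_region)

index.add("palak dal", [0.0, 0.2, 0.9])  # menu changed: add one prototype
after_label, _ = index.match(new_region)

print(rice_label, before_label, after_label)
```

In this toy setup the unseen region is reported as `unknown` until a single prototype for the new dish is added, after which it is matched without touching the rest of the index.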

From A Hospital Need To Everyday Apps

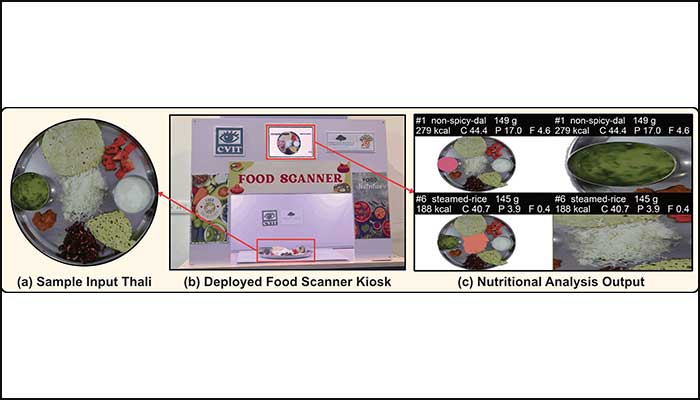

The project began with a real-world healthcare need. The request was particularly around monitoring nutrition for pregnant women, and that helped shape this work. Researchers at IIIT-H have already built a working prototype that can analyse an image of an Indian meal and estimate its contents. The current setup works with an overhead camera at a kiosk. But the long-term vision goes much further. “We want to extend it to an app-based system,” states Yash. To prepare for this, the team has captured data from multiple angles, allowing food to be scanned from a phone camera, not just a fixed setup. The team, however, acknowledges that many practical issues remain unsolved.

One major challenge is food mixing – rice and dal, gravies and vegetables – which makes calorie and nutrition estimation difficult. “We still have not addressed some of the fundamental issues,” notes Prof. Jawahar, and this includes understanding water content, texture and proportions. The ultimate goal is a system that combines food recognition, weight estimation, and nutritional breakdown – accurate enough for everyday use.

Mapping India’s Food Culture

Beyond individual plates, IIIT-H researchers are also building an “Indian food map” to capture how food varies across regions. Since food is deeply tied to culture, the idea is to visualise differences in ingredients, tastes and cooking styles across the country. This includes mapping raw materials, spice preferences and regional flavours. “Different parts of the country have different types of food, or sometimes similar dishes but different ways of cooking them,” remarks Prof. Jawahar.

Why Biryani Became the Test Case

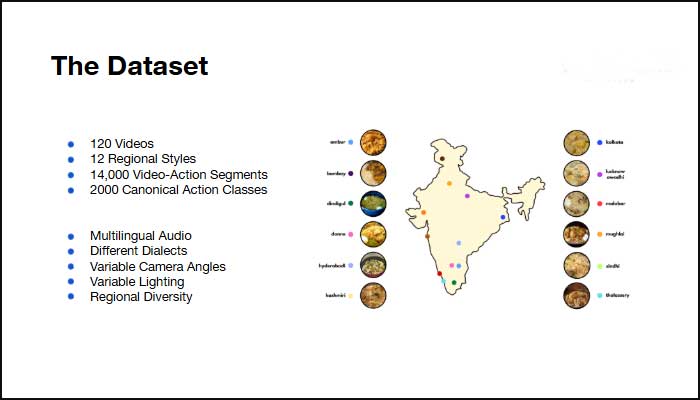

To manage the scale of the problem, researchers narrowed their focus to one iconic dish: the biryani. “We thought that in order to build a small prototype, why not focus on India’s most favourite and most ordered dish,” explains Farzana, one of the authors of the paper titled, “How Does India Cook Biryani?”, adding that even this required limiting the number of biryani styles studied.

The focus is not just on ingredients, but on YouTube cooking videos, which have replaced traditional cookbooks for many people. By analysing these videos, the team hopes to understand cooking sequences, dependencies and unspoken expertise. “The diversity of cooking each style of biryani which varies with the choice of ingredients, cooking utensils, sequence of preparing steps has been documented through a plethora of online cooking videos,” explains Farzana, adding, “But if I wanted to compare the Hyderabadi style of cooking vs. the Awadhi style, or if I wanted to know which recipe uses more whole spices, it’s not easy to get answers by just viewing the videos.” “If you are a naïve cook, you will struggle,” agrees Prof. Jawahar, pointing out that many steps in the videos are implied rather than explained.

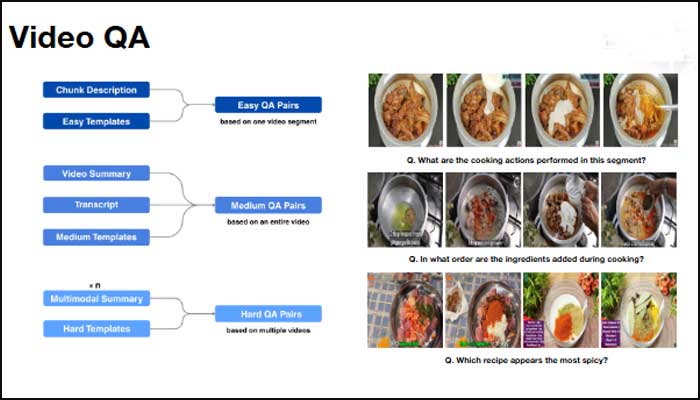

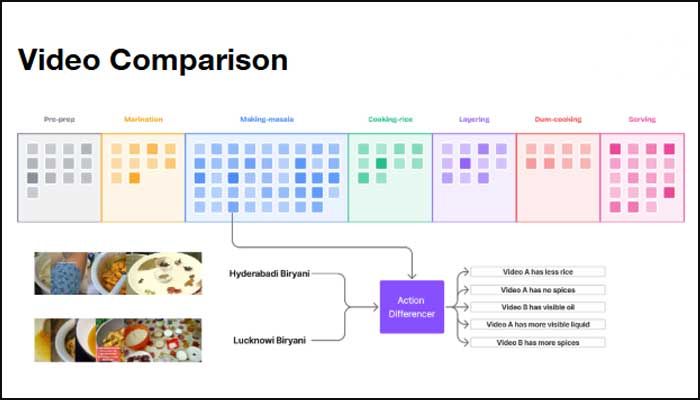

One of the most striking outcomes of the work is a system that can directly compare two recipes, for instance highlighting significant variations in how the masalas are made for the Hyderabadi and Awadhi biryani styles. The team also created a question-and-answer benchmark, with questions ranging from simple lookups to complex reasoning across multiple videos. This helps answer not only simple questions, such as which ingredient was added before the onions, but also more complex, comparative ones, such as which style uses less oil.

The Future: AI That Cooks With You

The long-term vision of the researchers goes well beyond analysing food. They imagine AI systems that actively assist people while they cook. “Today, you play the video, pause it, and carry out some of the cooking steps,” remarks Prof. Jawahar. “In the future, an AI assistant could watch you cook and guide you in real time, almost like somebody standing next to you and keeping silent when it (commentary) is not required.”

There are even more ambitious possibilities. “People talk about robotic cooking,” reports the professor, describing a future where AI could replicate the intuitive style of home cooking – “just like your grandmother cooking for you.”

Bigger Picture: Beyond Cuisine

This work also addresses a broader challenge in artificial intelligence: understanding instructional videos. Cooking videos are just one example of skill-based content, which also includes dance, crafts and vocational training. According to Prof. Jawahar, “Understanding instructional videos is a very important area that is emerging, with applications that range from education and skill development to employment generation.”

While research in exploring cuisines and culture is still evolving, the ambition is clear. “Some work is done, some work is in the plans,” reflects Prof. Jawahar. “But there is a lot more to do.”

Sarita Chebbi is a compulsive early riser. Devourer of all news. Kettlebell enthusiast. Nit-picker of the written word especially when it’s not her own.
