December 2022

Faculty and students presented the following papers at the Conference on Empirical Methods in Natural Language Processing (EMNLP-2022), held in Abu Dhabi from 7 to 11 December. The conference ran in hybrid mode, with both online and in-person participation. EMNLP is a leading conference in natural language processing and artificial intelligence.

- Ananya Mukherjee and Manish Shrivastava presented a poster on Unsupervised Embedding-based Metric for MT Evaluation with Improved Human Correlation at the Seventh Conference on Machine Translation at EMNLP-2022. Research work as explained by the authors:

In this paper, we describe our submission to the WMT22 metrics shared task. Our metric focuses on computing contextual and syntactic equivalences along with lexical, morphological, and semantic similarity. The intent is to capture the fluency and context of the MT outputs along with their adequacy. Fluency is captured using syntactic similarity, and context is captured using sentence similarity computed from sentence embeddings. The final sentence translation score is a weighted combination of three similarity scores: (a) syntactic similarity; (b) lexical, morphological, and semantic similarity; and (c) contextual similarity. This paper outlines two improved versions of MEE, namely MEE2 and MEE4. Additionally, we report our experiments on the en-de, en-ru and zh-en language pairs from the WMT17-19 test sets and show the correlation with human assessments.
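The weighted combination described above can be illustrated with a minimal sketch. The function name, the assumption that each score lies in [0, 1], and the default equal weights are all hypothetical; the actual weights used in MEE2 and MEE4 are not given here.

```python
def combine_similarities(syntactic, lexical_semantic, contextual,
                         weights=(1.0, 1.0, 1.0)):
    """Return the weighted average of three similarity scores.

    Assumption: each score lies in [0, 1]. The weights are illustrative
    placeholders, not the values used by the authors.
    """
    if len(weights) != 3:
        raise ValueError("expected exactly three weights")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    scores = (syntactic, lexical_semantic, contextual)
    # Normalise by the weight total so the result stays in [0, 1].
    return sum(w * s for w, s in zip(weights, scores)) / total


# Example: equal weights, then extra weight on syntactic similarity.
equal = combine_similarities(0.8, 0.6, 0.7)
syntax_heavy = combine_similarities(0.8, 0.6, 0.7, weights=(2.0, 1.0, 1.0))
```

Normalising by the sum of the weights keeps the combined score on the same scale as its inputs, whichever weights are chosen.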

- Ananya Mukherjee and Manish Shrivastava presented a poster on REUSE: REference-free UnSupervised quality Estimation metric at the Seventh Conference on Machine Translation at EMNLP-2022. Research work as explained by the authors:

This paper describes our submission to the WMT2022 shared metrics task. Our unsupervised metric estimates translation quality at the chunk level and the sentence level. Source and target sentence chunks are retrieved using a multi-lingual chunker. Chunk-level similarity is computed from BERT contextual word embeddings, and sentence similarity scores are calculated from the sentence embeddings of Language-Agnostic BERT models. The final quality estimation score is obtained by mean pooling the chunk-level and sentence-level similarity scores. This paper outlines our experiments and also reports the correlation with human judgements for the en-de, en-ru and zh-en language pairs of the WMT17, WMT18 and WMT19 test sets.
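As a rough illustration of the final pooling step, the sketch below averages a chunk-level and a sentence-level cosine similarity. It takes the embeddings as precomputed lists of floats. The way chunks are matched (best target match for each source chunk, then averaged) is an assumption made for this example, as are all function names; none of this is the authors' implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def chunk_level_similarity(src_chunks, tgt_chunks):
    """Average, over source chunks, of the best cosine match among target chunks.

    Assumption: this matching scheme is hypothetical and chosen for brevity.
    """
    if not src_chunks or not tgt_chunks:
        return 0.0
    best = [max(cosine(s, t) for t in tgt_chunks) for s in src_chunks]
    return sum(best) / len(best)


def quality_estimate(src_chunks, tgt_chunks, src_sentence, tgt_sentence):
    """Mean-pool the chunk-level and sentence-level similarity scores."""
    chunk_score = chunk_level_similarity(src_chunks, tgt_chunks)
    sentence_score = cosine(src_sentence, tgt_sentence)
    return (chunk_score + sentence_score) / 2
```

In practice the vectors would come from a multilingual encoder; toy two-dimensional vectors are enough to check the arithmetic.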

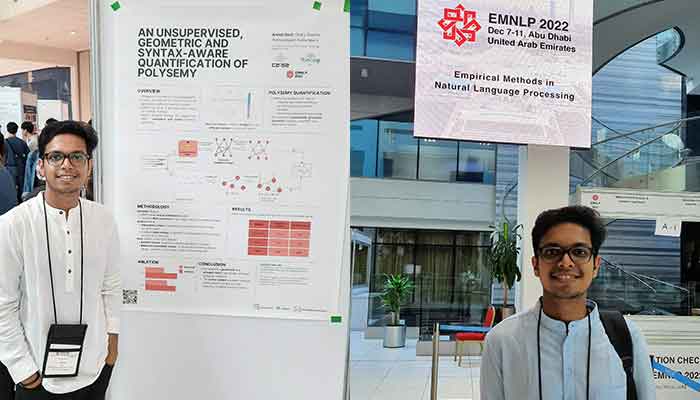

- Prof. Ponnurangam Kumaraguru; Anmol Goel, a Master's student working with Prof. Ponnurangam Kumaraguru; and Dr. Charu Sharma presented a paper on An Unsupervised, Geometric and Syntax-aware Quantification of Polysemy.

Research work as explained by the authors:

Polysemy is the phenomenon where a single word form possesses two or more related senses. It is a ubiquitous part of natural language, and analyzing it has sparked rich discussions in the linguistics, psychology and philosophy communities alike. With scarce attention paid to polysemy in computational linguistics, and even scarcer attention toward quantifying polysemy, in this paper we propose a novel, unsupervised framework to compute and estimate polysemy scores for words in multiple languages. We infuse our proposed quantification with syntactic knowledge in the form of dependency structures. This informs the final polysemy scores of the lexicon, motivated by recent linguistic findings that suggest there is an implicit relation between syntax and ambiguity/polysemy. We adopt a graph-based approach by computing the discrete Ollivier-Ricci curvature on a graph of the contextual nearest neighbors. We test our framework on curated datasets controlling for different sense distributions of words in 3 typologically diverse languages: English, French and Spanish. The effectiveness of our framework is demonstrated by significant correlations of our quantification with expert human-annotated language resources like WordNet. We observe a 0.26 point increase in the correlation coefficient as compared to previous quantification studies in English. Our research leverages contextual language models and syntactic structures to empirically support the widely held theoretical linguistic notion that syntax is intricately linked to ambiguity/polysemy. We will release the code for the paper on acceptance.
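For readers unfamiliar with the curvature mentioned above, the standard definition of discrete Ollivier-Ricci curvature on an edge between nodes $x$ and $y$ is given below. The choice of the probability measures $m_x$ and $m_y$ (for example, uniform over each node's neighbors) is an assumption here; the announcement does not say which variant the paper uses.

```latex
\kappa(x, y) = 1 - \frac{W_1(m_x, m_y)}{d(x, y)}
```

Here $W_1$ is the 1-Wasserstein (earth mover's) distance between the two measures and $d(x, y)$ is the shortest-path distance in the graph. Edges inside tightly connected neighborhoods tend to have positive curvature, while bridge-like edges between otherwise separate regions tend to have negative curvature.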

Anmol Goel was awarded a travel grant of USD 1,500 to attend the conference and present the paper in Abu Dhabi, UAE.

Conference page: https://2022.emnlp.org/