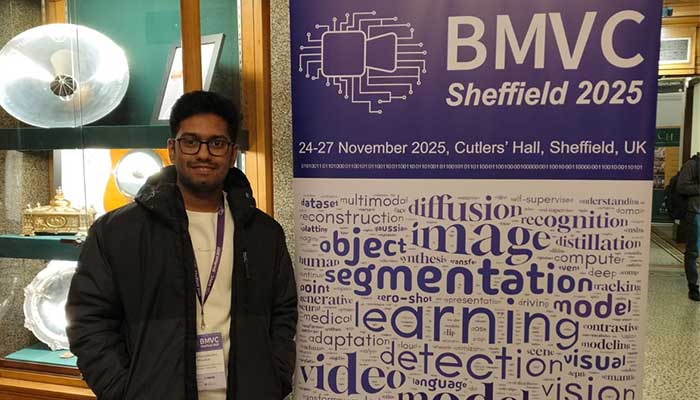

Kunal Kamalkishor Bhosikar, a dual-degree UG5 student working under the supervision of Prof. Charu Sharma, presented a paper titled MOGRAS: Human Motion with Grasping in 3D Scenes at the 36th British Machine Vision Conference (BMVC), held in Sheffield, United Kingdom, from 24 to 27 November. The paper was accepted at the conference's workshop From Human Understanding to Scene Modeling.

Here is a summary of the paper, as explained by the authors Kunal Bhosikar, Siddharth Katageri, Vivek Madhavaram, Kai Han and Charu Sharma:

Generating realistic full-body motion that interacts with objects is critical for applications in robotics, virtual reality, and human-computer interaction. While existing methods can generate full-body motion within 3D scenes, they often lack the fidelity needed for fine-grained tasks such as object grasping. Conversely, methods that generate precise grasping motions typically ignore the surrounding 3D scene. Bridging this gap, that is, generating full-body grasping motions that are physically plausible within a 3D scene, remains a significant challenge. To address it, we introduce MOGRAS (Human MOtion with GRAsping in 3D Scenes), a large-scale dataset that provides pre-grasping full-body walking motions and final grasping poses within richly annotated 3D indoor scenes. We leverage MOGRAS to benchmark existing full-body grasping methods and demonstrate their limitations in scene-aware generation. Furthermore, we propose a simple yet effective method to adapt existing approaches to work seamlessly within 3D scenes. Through extensive quantitative and qualitative experiments, we validate the effectiveness of our dataset and highlight the significant improvements our proposed method achieves, paving the way for more realistic human-scene interactions.

Full paper: https://bmva-archive.org.uk/bmvc/2025/assets/workshops/SHUM/Paper_12/paper.pdf

December 2025