At the recently concluded International Conference on Intelligent Robots and Systems (IROS 2024), held in Abu Dhabi, IIITH’s Robotics Research Centre made a splash by presenting six research papers. Here’s a brief roundup of some of that cutting-edge work.

Since 1988, IROS, one of the largest and most important robotics research conferences in the world, has provided a platform for the international robotics community to exchange knowledge and ideas about the latest advances in intelligent robots and smart machines. With sustainable IT assuming critical importance in recent years, it is not surprising that the theme of IROS 2024 was “Robotics for Sustainable Development”, with a focus on the role of robotics in achieving sustainability goals. The conference aimed to spotlight emerging researchers and practitioners, showcasing their contributions to sustainable development through plenary talks, workshops, technical sessions, competitions and interactive forums.

Robotic Grasping And Manipulation

In the research paper titled “Constrained 6-DoF Grasp Generation on Complex Shapes for Improved Dual-Arm Manipulation”, primary authors Md Faizal Karim, Gaurav Singh and Sanket Kalwar demonstrated a novel method of dual-arm grasping for robotic manipulation. According to them, grasping and manipulating large, delicate or irregularly shaped objects is challenging enough for robots, and even more so for dual-arm systems. “Imagine a robot carefully lifting a delicate vase or two robotic arms working together to assemble a piece of furniture. These tasks require a level of precision, stability, and coordination that existing grasping methods simply can’t achieve,” says Faizal. This, he elaborates, is because most existing methods generate grasp points all over the object without considering where exactly the robot ought to focus its efforts.

Tough Grip

The team used a method called Constrained Grasp Diffusion Fields (CGDF), which, instead of generating grasp points across the whole object, focuses its efforts on target areas. CGDF handles objects efficiently and accurately, even when they are large, fragile, or unusually shaped. And rather than relying on vast, custom part-annotated datasets to achieve targeted grasps, it uses a refined shape representation to direct its grasping efforts.

The Clutch Tests

To evaluate CGDF, the team compared it with two other grasp-generation methods. Each grasp was tested in three ways: 1) checking that it is stable and does not collide with the object; 2) having the robot try to lift the object in a simulated environment, where the grasp counted as successful if the object was lifted to a certain height without falling, and as failed if it fell; and 3) verifying that the grasp lies close to the target area. CGDF outperformed the other methods in all these tests, achieving higher success rates and better stability, holding objects securely without slipping or falling.
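The three-stage check described above can be pictured as a simple filter over simulated trials. This is a minimal sketch for intuition, not the team’s evaluation code: the `GraspTrial` record, its fields, and the thresholds `LIFT_HEIGHT_M` and `TARGET_RADIUS_M` are hypothetical, and the physics simulator that would fill them in is assumed.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the paper's actual values are not stated in this article.
LIFT_HEIGHT_M = 0.2      # object must be raised at least this high to count as lifted
TARGET_RADIUS_M = 0.05   # grasp must land within this distance of the target area


@dataclass
class GraspTrial:
    """Outcome of one simulated grasp attempt (illustrative fields)."""
    in_collision: bool        # check 1: gripper intersects the object
    stable: bool              # check 1: grasp passes a stability test
    lifted_height_m: float    # check 2: height the object reached during the lift
    dropped: bool             # check 2: object fell out of the grasp
    dist_to_target_m: float   # check 3: distance from grasp to the target area


def grasp_succeeds(trial: GraspTrial) -> bool:
    """Apply the three checks in order; any failure rejects the grasp."""
    if trial.in_collision or not trial.stable:
        return False
    if trial.dropped or trial.lifted_height_m < LIFT_HEIGHT_M:
        return False
    return trial.dist_to_target_m <= TARGET_RADIUS_M


def success_rate(trials: list[GraspTrial]) -> float:
    """Fraction of trials that pass all three checks."""
    if not trials:
        return 0.0
    return sum(grasp_succeeds(t) for t in trials) / len(trials)


trials = [
    GraspTrial(False, True, 0.25, False, 0.02),  # passes every check
    GraspTrial(True, True, 0.25, False, 0.02),   # collides with the object
    GraspTrial(False, True, 0.10, True, 0.02),   # object falls during the lift
    GraspTrial(False, True, 0.25, False, 0.12),  # secure, but far from the target
]
print(success_rate(trials))  # 0.25
```

Comparing methods then amounts to running the same trial set for each grasp generator and comparing the resulting success rates.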

Understanding and Navigating from Commands

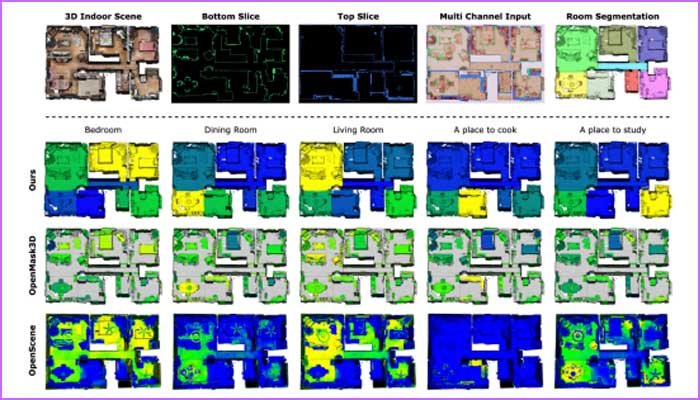

In the paper titled “QueST Maps: Queryable Semantic Topological Maps For 3D Scene Understanding”, researchers Yash Mehan, Rohit Jayanti, Kumaraditya Gupta and others demonstrated a novel way to enable robots to take instructions in natural language, combine them with their own understanding of the ‘scene’, and navigate to the desired location. “In order to understand a complex instruction such as ‘Go through the corridor on the right and fetch me the kettle from the bedroom opposite the bookshelf’, the robot needs to build an understanding at two levels,” explains Yash. “The first is the structural organization, which is breaking down the scene into different rooms and comprehending the topology, or the interconnectivity, between the rooms and the entire scene. The second is the semantic understanding: identifying the objects in the room and thereby deducing the kind of room it is,” he says.
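The two levels Yash describes can be illustrated with a toy scene graph: rooms as nodes and doorways as edges (the structural level), with each room’s type inferred from the objects it contains (the semantic level). This is a hypothetical sketch for intuition only. QueST Maps learns its representations from 3D data rather than from hand-written rules like the `OBJECT_HINTS` table below, and every name and region here is invented.

```python
from collections import Counter, deque

# Hypothetical object-to-room-type votes (semantic level).
OBJECT_HINTS = {
    "bed": "bedroom", "wardrobe": "bedroom",
    "kettle": "kitchen", "stove": "kitchen",
    "sofa": "living room", "tv": "living room",
}


def infer_room_type(objects):
    """Label a region by majority vote over the objects observed in it."""
    votes = Counter(OBJECT_HINTS[o] for o in objects if o in OBJECT_HINTS)
    return votes.most_common(1)[0][0] if votes else "unknown"


def shortest_route(doorways, start, goal):
    """Breadth-first search over the room connectivity graph (structural level)."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        room = queue.popleft()
        if room == goal:
            path = []
            while room is not None:  # walk back through parents to the start
                path.append(room)
                room = parents[room]
            return path[::-1]
        for nxt in doorways.get(room, []):
            if nxt not in parents:
                parents[nxt] = room
                queue.append(nxt)
    return []  # goal not reachable


# Toy scene: region ids with observed objects (r2 is an empty corridor), plus doorways.
regions = {"r1": ["sofa", "tv"], "r2": [], "r3": ["bed", "wardrobe", "kettle"], "r4": ["stove"]}
doorways = {"r1": ["r2"], "r2": ["r1", "r3", "r4"], "r3": ["r2"], "r4": ["r2"]}

labels = {r: infer_room_type(objs) for r, objs in regions.items()}
target = next(r for r, label in labels.items() if label == "bedroom")
print(labels)  # {'r1': 'living room', 'r2': 'unknown', 'r3': 'bedroom', 'r4': 'kitchen'}
print(shortest_route(doorways, "r1", target))  # ['r1', 'r2', 'r3']
```

Note that the kettle in r3 does not flip its label: the room is still deduced to be a bedroom from the majority of its objects, which is the kind of reasoning the quoted instruction relies on.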

Unique Factors

What makes this research unique is that it explicitly segments the input scene into topological regions such as “bedroom”, “dining room” and so on, so a natural-language prompt naming a room returns that room. Additionally, their approach segments transition areas such as doorways and arches, adding a further level of detail. The team reports that, unlike existing methods that demonstrate results on clean, structured scenes from synthetic data, their approach works in the real world, as shown by results on a fairly challenging real-world dataset. Using a transformer encoder trained with a contrastive loss function, the approach lets one query a scene with a simple “place to sleep” or “bedroom” and obtain remarkably accurate results.
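Querying a scene with text works because the encoder is trained so that each region’s embedding lands close to the embedding of its matching description. A common way to do this is an InfoNCE-style contrastive loss. The stdlib-only sketch below shows one direction of such a loss and a cosine-similarity query. It is an assumption that QueST Maps uses exactly this form, and the hand-made 3-D vectors stand in for real learned embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(regions, texts, temperature=0.1):
    """Mean cross-entropy of matching region i to text i against every text in the batch."""
    total = 0.0
    for i, r in enumerate(regions):
        logits = [cosine(r, t) / temperature for t in texts]
        m = max(logits)  # subtract the max for numerical stability
        log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_norm - logits[i]  # -log softmax probability of the correct pair
    return total / len(regions)


def query(text_emb, region_embs):
    """Return the region whose embedding is most similar to the text query."""
    return max(region_embs, key=lambda name: cosine(text_emb, region_embs[name]))


# Toy embeddings: each region roughly aligned with its own description.
region_embs = {"bedroom": [0.9, 0.1, 0.0], "kitchen": [0.1, 0.9, 0.1], "doorway": [0.0, 0.2, 0.9]}
text_embs = {"place to sleep": [1.0, 0.0, 0.1], "where I cook": [0.0, 1.0, 0.0], "door": [0.1, 0.1, 1.0]}

aligned = info_nce(list(region_embs.values()), list(text_embs.values()))
mismatched = info_nce(list(region_embs.values()), list(reversed(text_embs.values())))
print(aligned < mismatched)                            # True
print(query(text_embs["place to sleep"], region_embs))  # bedroom
```

Training would adjust the encoder to lower this loss on correctly paired data; at query time only the similarity ranking is needed, which is why a phrase like “place to sleep” can retrieve the bedroom without naming it.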

The researchers are upbeat about extending their work to segment open-plan environments (where there are no clear demarcations of areas) as well as multi-level spaces. “We’ll be tackling these challenges in our upcoming work,” they state.

Robot Manipulation Via Visual Servoing

Robotics researchers Pranjali Pathare, Gunjan Gupta and others worked on enhancing existing visual servoing algorithms in their research paper titled “Imagine2Servo: Intelligent Visual Servoing with Diffusion-Driven Goal Generation for Robotic Tasks”. Visual servoing refers to controlling a robot’s motion using visual feedback from vision sensors. It is very important in autonomous systems because it helps them “see” the environment and make adjustments in real time, a necessity for tasks requiring precise manipulation and navigation. “But one of the major challenges in implementing visual servoing controllers is the requirement of a predefined goal image, which limits their use across different applications,” remarks Pranjali. The team instead proposed task-based sub-goal images that the controller can use to reach the desired final outcome.
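To see why a goal image matters, consider the classical image-based visual servoing (IBVS) law from the textbook literature (not Imagine2Servo itself). The controller drives image features `s` toward their positions `s*` in the goal image with the camera velocity `v = -λ L⁺ (s - s*)`, where `L` stacks the interaction matrices of the tracked points. The stdlib sketch below implements that law for normalized point features, computing the pseudo-inverse step through the normal equations. The gain, point coordinates, and depths are illustrative assumptions.

```python
def interaction_matrix(x, y, z):
    """2x6 interaction matrix of a normalized image point (x, y) at depth z.

    Columns follow the camera twist order (vx, vy, vz, wx, wy, wz).
    """
    return [
        [-1.0 / z, 0.0, x / z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / z, y / z, 1.0 + y * y, -x * y, -x],
    ]


def solve(a, b):
    """Solve the square system a u = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def stack(points, goal_points, depths):
    """Stack per-point interaction matrices into L and feature errors into e = s - s_goal."""
    L, e = [], []
    for (x, y), (xg, yg), z in zip(points, goal_points, depths):
        L.extend(interaction_matrix(x, y, z))
        e.extend([x - xg, y - yg])
    return L, e


def ibvs_velocity(points, goal_points, depths, gain=0.5):
    """Camera twist v = -gain * pinv(L) e, with pinv(L) = (L^T L)^-1 L^T (full column rank assumed)."""
    L, e = stack(points, goal_points, depths)
    rows = range(len(L))
    ltl = [[sum(L[k][i] * L[k][j] for k in rows) for j in range(6)] for i in range(6)]
    rhs = [-gain * sum(L[k][i] * e[k] for k in rows) for i in range(6)]
    return solve(ltl, rhs)


# Four tracked points now, the same points in the goal image, and assumed depths (metres).
points = [(0.10, 0.10), (-0.10, 0.12), (-0.12, -0.10), (0.11, -0.09)]
goal_points = [(0.12, 0.08), (-0.08, 0.11), (-0.10, -0.12), (0.13, -0.10)]
depths = [1.0, 1.1, 0.9, 1.05]

v = ibvs_velocity(points, goal_points, depths)
print([round(c, 3) for c in v])  # (vx, vy, vz, wx, wy, wz)
```

Every quantity the controller uses is derived from `goal_points`, that is, from a goal image. Without one there is nothing to servo toward, which is the gap that generated sub-goal images are meant to fill.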

They trained a conditional diffusion model to generate goal images based on current observations. This equips the servoing system to automatically determine and visualise the target images it needs to reach for the task at hand. When tested in real-world scenarios, the system proved highly effective at long-range navigation and complex manipulation without needing predefined goal images.
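Generating a goal image with a diffusion model means starting from random noise and repeatedly denoising it, with the network steered by a conditioning input (here, the current observation). The stdlib sketch below shows the standard DDPM reverse-sampling loop on a flattened “image”. It is not Imagine2Servo’s model: the linear noise schedule, the step count, and `make_oracle_eps`, a stand-in for a trained observation-conditioned denoising network, are all assumptions chosen so the loop can be checked end to end.

```python
import math
import random


def linear_betas(steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule beta_1..beta_T (a common DDPM default)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def sample(eps_model, cond, size, betas, seed=0):
    """DDPM ancestral sampling: start from x_T ~ N(0, I) and step down to x_0."""
    rng = random.Random(seed)
    alpha_bars = cumulative_alphas(betas)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(len(betas))):
        alpha_t = 1.0 - betas[t]
        eps = eps_model(x, t, cond)  # predicted noise, conditioned on the observation
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alpha_t) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # simple variance choice sigma_t^2 = beta_t
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x


def make_oracle_eps(betas, target):
    """Hypothetical stand-in for a trained network: returns the exact noise separating
    x_t from a known clean target. It ignores `cond`; a real model would use it."""
    alpha_bars = cumulative_alphas(betas)

    def eps_model(x, t, cond):
        ab = alpha_bars[t]
        return [(xi - math.sqrt(ab) * ci) / math.sqrt(1.0 - ab) for xi, ci in zip(x, target)]

    return eps_model


target = [0.2, -0.5, 0.9, 0.3]       # hypothetical 4-"pixel" goal image
observation = [0.0, 0.0, 0.0, 0.0]   # placeholder conditioning input
betas = linear_betas(50)
goal = sample(make_oracle_eps(betas, target), observation, len(target), betas)
print([round(g, 6) for g in goal])   # [0.2, -0.5, 0.9, 0.3]
```

With a trained network in place of the oracle, the output would be a plausible goal image for the current observation rather than an exact copy; recovering the target here only confirms that the sampling arithmetic is consistent.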

Differentiable Planning For Vision-Language Driving

Mapping, localization, and planning are the key components of the autonomous navigation stack. While modular pipelines and end-to-end architectures have been the traditional driving paradigms, integrating the language modality is gradually becoming a de facto approach to enhancing the explainability of autonomous driving systems. A natural extension of these systems in the vision-language context is the ability to follow navigation instructions given in natural language, for example, “Take a right turn and stop near the food stall.” The primary objective is to ensure reliable, collision-free planning.

In current vision-language-action models, the perception and prediction components are often tuned for their own objectives rather than for the overall navigation goal. In such a pipeline, the planning module depends heavily on the perception abilities of these models, which makes it vulnerable to prediction errors. Training end-to-end with the downstream planning task therefore becomes crucial, as it ensures feasible plans even when the upstream perception and prediction components make arbitrary predictions. To achieve this, a team of researchers from IIITH’s Robotics Research Centre comprising Pranjal Paul, Anant Garg and Tushar Choudhary, under the guidance of Prof. Madhava Krishna, Head of the Robotics Research Centre (RRC), IIITH, developed a lightweight vision-language model that combines visual scene understanding with natural language processing.

Distinguishing Feature

The model, explained at length in their paper titled “LeGo-Drive: Language-enhanced Goal-oriented Closed Loop End-to-End Autonomous Driving”, processes the vehicle’s perspective view alongside encoded language commands to predict goal locations in one shot. “However, these predictions can sometimes conflict with real-world constraints. For example, when instructed to ‘park behind the red car’, the system might suggest a location in a non-road area or one overlapping with the red car itself. To overcome this challenge, we augment the perception module with a custom planner within a neural network framework,” explains Pranjal, calling the end-to-end training approach the secret sauce of their work. This differentiable planner, which lets gradients flow through the entire architecture during training, improves both prediction accuracy and planning quality.
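The benefit of a differentiable planner is that the training loss can be measured on the planned trajectory and still push gradients back into the goal predictor. The toy below shows this with a hypothetical one-dimensional planner whose optimum has a closed form, so the chain rule can be written out by hand. It is not LeGo-Drive’s planner: the smoothness cost, the weight `w`, the horizon `n`, and the single-parameter “goal predictor” are illustrative assumptions.

```python
def plan(start, goal, n=10, w=1.0):
    """Minimise sum (x[k+1]-x[k])^2 + w*(x[n]-goal)^2 with x[0] = start.

    The optimum is evenly spaced waypoints ending at e = (start + w*n*goal) / (1 + w*n),
    so the planned trajectory is a smooth (here linear) function of the predicted goal.
    """
    end = (start + w * n * goal) / (1.0 + w * n)
    waypoints = [start + (end - start) * k / n for k in range(n + 1)]
    d_end_d_goal = w * n / (1.0 + w * n)  # analytic gradient through the planner
    return waypoints, d_end_d_goal


def train_goal(start, true_end, steps=200, lr=0.5):
    """Fit one goal parameter so that the planned endpoint matches the ground truth."""
    theta = 0.0  # hypothetical goal predictor: a single learnable number
    for _ in range(steps):
        waypoints, grad_plan = plan(start, theta)
        loss_grad = 2.0 * (waypoints[-1] - true_end)  # d/d(end) of (end - true_end)^2
        theta -= lr * loss_grad * grad_plan           # chain rule through the planner
    return theta, plan(start, theta)[0][-1]


theta, end = train_goal(start=0.0, true_end=5.0)
print(round(theta, 4), round(end, 4))  # 5.5 5.0
```

The learned goal (5.5) overshoots the true endpoint (5.0) because the predictor has learned to compensate for how the planner behaves. A predictor trained in isolation to output 5.0 would leave the planned trajectory short, which is a toy version of why training through the planner helps.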

Common Theme

According to Prof. Madhava Krishna, the state-of-the-art (SOTA) research carried out at RRC, IIITH, while diverse, shares an underlying unifying thread. “Much of this research translates to improving robotic performance in the personal and assistive robotics space, be it indoor autonomous robots, self-driving in on-road conditions or indoor manipulation. The eventual aim is to enhance and introduce new functionalities in robotic systems that bring them closer to deployment for such applications. Leveraging the recent growth in foundation models in computer vision, and integrating them aptly with classical robotics and learning algorithms, makes this possible,” he says.

Sarita Chebbi is a compulsive early riser. Devourer of all news. Kettlebell enthusiast. Nit-picker of the written word, especially when it’s not her own.
