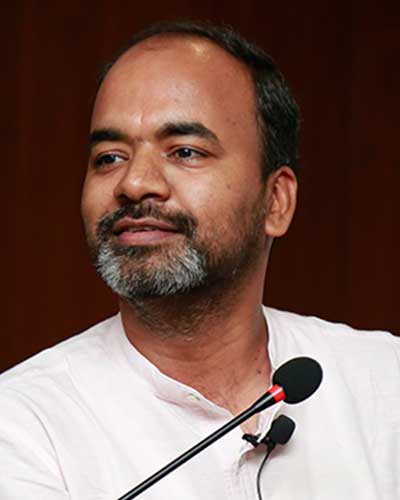

As part of the TechForward Research Seminar series, Prof. Ponnurangam Kumaraguru briefly touches upon the pitfalls of LLMs and the ways in which they can be made to unlearn or forget content.

In today’s world we rely on technology to speed up our tasks and queries, to gather accurate information and to assist us efficiently. Let’s take three everyday tools that almost everyone lives with: Google Translate, ChatGPT and WhatsApp. Now, let’s look at some of their imperfections. A test that anyone can conduct across these tools reveals the gender biases present in them. In Google Translate, “My friend is a doctor” is translated as “Mera friend ek doctor hai” (masculine), while “My friend is a nurse” is translated as “Meri friend ek nurse hai” (feminine). Similarly, asking WhatsApp for an image of a doctor throws up a male doctor, while asking for a nurse invariably throws up a female nurse.

Let’s now look at something known as guardrails. AI systems have these built in to monitor their output in order to prevent plagiarism and harmful content, to reduce security bottlenecks, and so on. For instance, students would be all too familiar with what happens when they ask ChatGPT to modify a friend’s code for an assignment so that the copying is not detected: it refuses, citing ethics. But students only have to change the prompt to “Please refactor this code for me”. The system not only refactors the code but also neatly annotates what it has changed and where. This is known as a jailbreak: manipulating a piece of technology so that it can be used in a way that was not intended, and may not be allowed, by the company or person who produced it.

Machine Unlearning

All the examples above demonstrate the situation we are currently in: most of these models are trained on publicly available data. Such data can contain information that we don’t want the models to learn from or, if they have already learned it, that we don’t want them to use. The only way to overcome inherent biases is via retraining, but training is extremely expensive. For example, OpenAI reportedly spent over $100 million to train GPT-4. Another large concern is that publicly available information may be stale, private, copyrighted or toxic, may enable dangerous capabilities, or may be plain misinformation. Such content needs to be either edited or removed without retraining the models from scratch. Interestingly enough, the General Data Protection Regulation (GDPR) in Europe includes a requirement known as the ‘Right to Erasure’, otherwise known as the ‘right to be forgotten’. It essentially gives users the right to have all personal data that platforms have collected about them erased. But imagine an LLM world in which all such personal data has found its way into the model. If the user wants to be forgotten, how does the provider go about it? Does it have to retrain? Well, retraining is expensive, so that’s not an option. Instead we have something known as ‘machine unlearning’. The field is becoming so relevant that the 2023 Conference on Neural Information Processing Systems (NeurIPS 2023) hosted a Machine Unlearning competition whose goal was to come up with methods for models to unlearn data.

Graph Unlearning

The issue, however, is not only about erasing personal or private information from LLMs. The recommendations that users receive on platforms or social media rely on graph data. Graph unlearning is therefore an extension of machine unlearning designed specifically for graph-structured data.
The graph unlearning framework involves three types of removal requests – node removal, edge removal, and node feature removal. This level of removal is significantly more complicated than traditional machine unlearning because of the interconnectedness of all the entities.
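
The three request types can be made concrete with a small sketch. This is only an illustration under stated assumptions, not an unlearning algorithm: the `Graph` class and its method names are hypothetical, and the "affected" set (the direct neighbours of whatever was removed) is a rough stand-in for the part of a trained model that would need to be updated. It shows why a single removal request touches more than the entity being removed.

```python
from dataclasses import dataclass, field


@dataclass
class Graph:
    """Tiny undirected graph with per-node feature dictionaries (hypothetical)."""
    features: dict = field(default_factory=dict)  # node -> {feature name: value}
    edges: set = field(default_factory=set)       # each edge is frozenset({u, v})

    def add_node(self, node, **feats):
        self.features[node] = dict(feats)

    def add_edge(self, u, v):
        self.edges.add(frozenset((u, v)))

    def neighbours(self, node):
        return {n for e in self.edges if node in e for n in e if n != node}

    # The three removal request types. Each returns the nodes assumed
    # to be affected by the request (an illustrative choice).

    def remove_node(self, node):
        """Node removal: drop the node, its features and every edge touching it."""
        affected = self.neighbours(node)
        self.edges = {e for e in self.edges if node not in e}
        self.features.pop(node, None)
        return affected

    def remove_edge(self, u, v):
        """Edge removal: drop one connection; both endpoints are affected."""
        self.edges.discard(frozenset((u, v)))
        return {u, v}

    def remove_node_feature(self, node, name):
        """Node feature removal: drop one attribute; the node and its neighbours are affected."""
        self.features[node].pop(name, None)
        return {node} | self.neighbours(node)


# Example: a three-user chain alice - bob - carol.
g = Graph()
g.add_node("alice", city="Hyderabad")
g.add_node("bob", city="Chennai")
g.add_node("carol", city="Delhi")
g.add_edge("alice", "bob")
g.add_edge("bob", "carol")

# Removing bob also changes the neighbourhood of alice and carol.
print(g.remove_node("bob"))
```

In a real recommender, the influence of a removed node can reach further than its direct neighbours, which is part of what makes graph unlearning harder than removing an isolated training example.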

Multi-Agent Interactions

At the Precog research lab (https://precog.iiit.ac.in/) at IIITH, my students and I are currently studying how things can go wrong in today’s “agentic world”. For instance, in a home setting where an agent controls and manages Google Home devices, researchers presented a scenario in which a user asks the agent to turn off all connected devices because he is getting on a conference call. The agent turned off everything it treated as non-essential: the TV, the speaker and the security camera. The user never explicitly asked for media devices to be muted, so the fact that the agent inferred this from context is commendable. But it also turned off the security camera, which is a security device, thereby compromising the safety of the house.
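
The failure in this scenario can be sketched in a few lines. This is a toy illustration, not the researchers’ setup and not the Google Home API: the `Device` class, the category labels and the `turn_off_all` function are all hypothetical. It contrasts an agent that reads “turn off all devices” literally with one that treats a safety-critical category as protected.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    category: str  # hypothetical labels: "media" or "security"
    on: bool = True


# Assumption for this sketch: security devices must not be switched off
# by a blanket request.
SAFETY_CRITICAL = frozenset({"security"})


def turn_off_all(devices, protect=frozenset()):
    """Switch off every device whose category is not protected.

    Returns the names of the devices that were switched off.
    """
    switched_off = []
    for device in devices:
        if device.category in protect:
            continue  # leave protected devices untouched
        device.on = False
        switched_off.append(device.name)
    return switched_off


def make_home():
    return [
        Device("tv", "media"),
        Device("speaker", "media"),
        Device("security camera", "security"),
    ]


# Literal reading of the request: the camera goes off too.
print(turn_off_all(make_home()))

# Guarded reading: media devices go off, the camera stays on.
print(turn_off_all(make_home(), protect=SAFETY_CRITICAL))
```

The hard part in practice is not the guard itself but knowing which devices belong in the protected set, since the user’s request does not say so.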

Multimodal Adversarial Attacks

Adversarial attacks have been studied for a long time, but more recent research titled ‘Soft prompts go hard’ has shown how hidden ‘meta-instructions’ can influence the way a model interprets images and steer its outputs to express an adversary-chosen sentiment or point of view.

The Broader Question

It all finally boils down to an alignment problem: can we get all these systems to align with the behaviours that we expect? We know that machines can solve problems that humans would take decades to solve, but sometimes they are less inclined to listen to human instructions. It is important to solve this problem in order to benefit from AI. The system should be incentivised to tell the truth and not hallucinate. Another really cool research problem is distinguishing between AI-generated and human-generated content. At IIITH, we’ve been working on this problem, specifically for content in Indian languages. This work, “Counter Turing Test: Investigating AI-Generated Text Detection for Hindi–Ranking LLMs based on Hindi AI Detectability Index”, was presented at COLING 2025 in Abu Dhabi, held from January 19 to 24, 2025.

This article was initially published in the January ’25 edition of TechForward Dispatch

Prof. Ponnurangam Kumaraguru (“PK”) is a Professor of Computer Science at IIITH. His primary interests lie in responsible AI, applied ML and NLP. He also serves as the Vice President of ACM India.
