Prof S. Bapi Raju outlines IIITH’s innovations in detecting sleep disorders through automatic classification of sleep stages, which enables in-depth sleep analysis.

A nutritious diet and adequate exercise are cornerstones of good health. But good-quality sleep is just as crucial, affecting physical, cognitive, and emotional well-being. Quality sleep supports muscle growth, tissue repair, and immune function, while poor sleep is linked to higher risks of cardiovascular disease, diabetes, and obesity. Cognitively, sleep aids memory consolidation, problem-solving, and decision-making; deprivation impairs concentration, slows reactions, and contributes to long-term cognitive decline and neurodegenerative diseases like Alzheimer’s. Emotionally, inadequate sleep increases stress, anxiety, and depression, exacerbating mental health issues and reducing resilience to daily stressors. For example, insomnia heightens anxiety and depression, creating a harmful cycle. Poor sleep also affects performance and safety: it contributes to drowsy driving, a major cause of road accidents, as well as to decreased productivity and increased errors in professional settings.

Accurate sleep quality assessment is therefore essential for diagnosing and managing sleep disorders like insomnia, sleep apnea, and narcolepsy, which can lead to chronic health issues if untreated. Advanced diagnostic tools are needed to monitor sleep quality efficiently and accurately, enabling timely and effective interventions and ultimately improving quality of life and health outcomes.

Role of AI in Diagnosing Sleep Disorders

Sleep is typically divided into several distinct stages that cycle throughout the night, each characterised by unique brain wave patterns and physiological activity. These stages are essential for various cognitive and biological functions, including memory consolidation, emotional regulation, and physical restoration. The sleep cycle broadly consists of non-rapid eye movement (NREM) sleep and rapid eye movement (REM) sleep. NREM sleep is further subdivided into three stages: N1 (light sleep), N2 (deeper sleep), and N3 (deep or slow-wave sleep, SWS). Using electrophysiological signals such as the electroencephalogram (EEG), the different sleep stages (wake, N1, N2, N3, and REM) need to be identified either manually or by an algorithm. Sifting through a typical 8-hour sleep lab recording manually and scoring the various stages is tedious and error-prone.

Recent advances in artificial intelligence (AI) offer promising new avenues for diagnosing and managing sleep disorders. Deep learning (DL), a subset of AI, has shown exceptional potential in automating sleep stage classification, a task traditionally performed through labour-intensive manual scoring of polysomnography (PSG), or sleep study, data (see Figure 1 for a typical sleep lab setup). DL models, particularly those employing supervised and unsupervised learning techniques, can analyse large datasets to identify patterns and classify sleep stages with high precision.
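To give a sense of the scale of the scoring task described above, here is a minimal Python sketch. It assumes the recording is scored in fixed 30-second epochs, a common convention that this article does not state, and the short hypnogram is made up purely for illustration.

```python
from collections import Counter

EPOCH_SECONDS = 30  # assumed epoch length; not stated in the article
STAGES = ("W", "N1", "N2", "N3", "REM")


def count_epochs(recording_hours, epoch_seconds=EPOCH_SECONDS):
    """Number of whole epochs in a recording of the given length."""
    return int(recording_hours * 3600 // epoch_seconds)


def minutes_per_stage(hypnogram, epoch_seconds=EPOCH_SECONDS):
    """Total minutes spent in each stage, given one stage label per epoch."""
    counts = Counter(hypnogram)
    return {stage: counts.get(stage, 0) * epoch_seconds / 60 for stage in STAGES}


# How many stage labels a scorer must assign for an 8-hour recording.
print(count_epochs(8))

# A made-up ten-epoch hypnogram, purely for illustration.
toy_hypnogram = ["W", "W", "N1", "N2", "N2", "N2", "N3", "N3", "REM", "REM"]
print(minutes_per_stage(toy_hypnogram))
```

Under this assumption a single night already requires hundreds of individual stage decisions, which is why automated scoring is attractive.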

Supervised and Unsupervised Learning Approaches

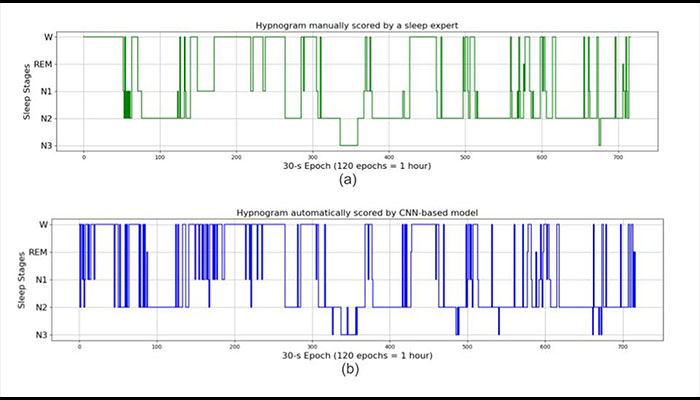

Supervised Learning involves training DL models on annotated datasets where the correct output is known. These models learn from labelled examples and can make accurate predictions on new, unseen data. For sleep stage classification, supervised models like Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have been widely used. These models have demonstrated improvements in accuracy and efficiency over traditional methods, reducing the need for human intervention and enabling quicker, more reliable diagnoses (see Figure 2 for CNN-based classification results in the form of a hypnogram).
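The idea of learning from labelled examples can be sketched without a deep learning framework. The snippet below uses a nearest-centroid classifier, which is a deliberately simple stand-in and not a CNN or RNN. The two-number features per epoch and their values are hypothetical, chosen only to show the train-then-predict pattern.

```python
def fit_centroids(features, labels):
    """Average the feature vectors of each labelled class."""
    sums, counts = {}, {}
    for vector, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vector)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vector)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, vector):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((c - v) ** 2 for c, v in zip(centroid, vector))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Hypothetical features per epoch: (slow-wave power, fast-wave power).
train_x = [(0.9, 0.1), (0.8, 0.2), (0.2, 0.9), (0.1, 0.8)]
train_y = ["N3", "N3", "W", "W"]  # labels an expert scorer would provide

centroids = fit_centroids(train_x, train_y)
print(predict(centroids, (0.85, 0.15)))  # an unseen epoch dominated by slow waves
```

A deep network replaces the hand-picked features and the centroid rule with learned ones, but the workflow is the same: fit on annotated epochs, then predict on unseen ones.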

Unsupervised Learning does not rely on labelled data. Techniques such as clustering and anomaly detection find patterns in the data without predefined labels, making them particularly useful for exploring large datasets where manual annotation is impractical. Self-supervised learning, a form of unsupervised learning, has also been successfully applied to EEG data for sleep staging tasks.
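As an illustration of clustering without labels, the following sketch runs plain k-means on a single hypothetical per-epoch feature. The feature values and the starting centres are assumptions made for the example; real EEG pipelines work with far richer representations.

```python
def kmeans_1d(values, centres, iterations=10):
    """Plain k-means on scalar values, starting from the given centres."""
    centres = list(centres)
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centre.
        groups = [[] for _ in centres]
        for value in values:
            nearest = min(range(len(centres)), key=lambda i: abs(value - centres[i]))
            groups[nearest].append(value)
        # Update step: move each centre to the mean of its group
        # (an empty group keeps its previous centre).
        centres = [sum(g) / len(g) if g else c for g, c in zip(groups, centres)]
    assignments = [
        min(range(len(centres)), key=lambda i: abs(v - centres[i])) for v in values
    ]
    return centres, assignments


# Hypothetical unlabelled feature, one value per epoch.
epoch_feature = [0.10, 0.15, 0.12, 0.80, 0.85, 0.90]
centres, assignments = kmeans_1d(epoch_feature, centres=[0.0, 1.0])
print(assignments)  # cluster index per epoch; no stage names are involved
```

The algorithm only groups similar epochs. Deciding which cluster corresponds to which sleep stage still requires outside knowledge or a small amount of labelled data.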

Innovations in Sleep Stage Classification

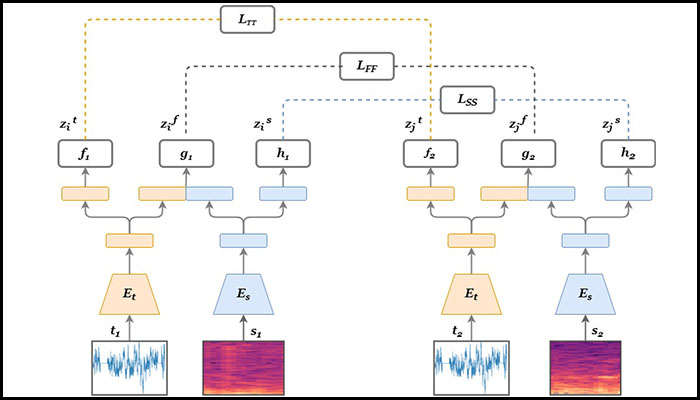

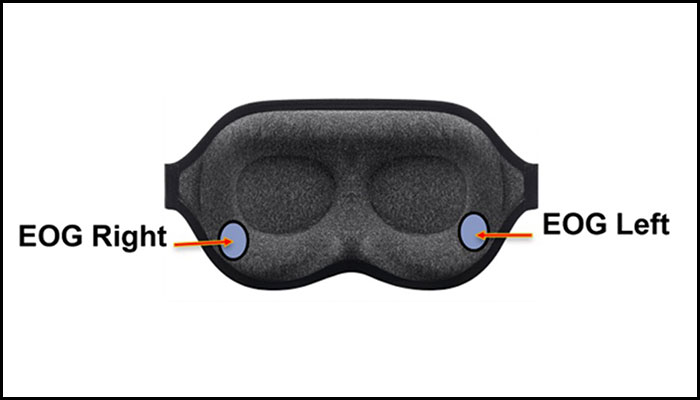

At IIITH, we have been working on both supervised and unsupervised learning algorithms for classifying sleep stages. mulEEG [1], our multi-view self-supervised learning method, is an unsupervised approach that leverages complementary information from multiple views to enhance representation learning (see Figure 3). Our approach outperforms existing multi-view baselines and supervised methods in transfer learning for sleep-staging tasks. It also makes training markedly more efficient, with an 8x speed-up.

We have also come up with an EOG-based wearable solution [2]. EOG, or electrooculography, measures the electrical activity of the eyes to track their movement and position. Current wearable solutions for sleep monitoring, which rely on sensors for parameters like heart rate variability (HRV), respiratory rate, and movement, face challenges in accuracy and reliability compared to traditional PSG. These devices often struggle to deliver consistent data quality across diverse populations and environments, hindering the precise sleep stage classification necessary for diagnosing sleep disorders. To address these issues, our EOG and electrode-based mask combines non-intrusive monitoring with multi-channel data collection, providing real-time feedback.

In addition, IIITH is collaborating with the NIMHANS Neurology Department to create an Indian SLEEP Stroke dataset (iSLEEPS), hosted at IHub-Data, IIIT-H. It marks a significant advancement in sleep research, particularly in addressing the scarcity of annotated datasets in India. The dataset includes sleep data of 100 ischemic stroke patients, most of whom suffer from sleep disorders, featuring comprehensive polysomnography (PSG) recordings and detailed clinical annotations. By adhering to strict ethical guidelines and ensuring patient privacy through anonymization, iSLEEPS provides a robust foundation for developing AI models tailored to the Indian population. This initiative facilitates global collaboration in sleep medicine, enabling researchers to develop more accurate diagnostic tools and therapeutic strategies, identify unique patterns and risk factors specific to the Indian population, and ultimately enhance the global understanding of sleep health.
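Returning to the multi-view self-supervised idea mentioned earlier in this section, one common way to learn from two views of the same epoch is a contrastive objective that pulls matching pairs together and pushes mismatched pairs apart. The sketch below shows a generic InfoNCE-style loss in plain Python. It is not the loss used in mulEEG, and the embeddings, the function names, and the temperature value are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(view_a, view_b, temperature=0.5):
    """Mean InfoNCE loss, where view_a[i] and view_b[i] embed the same epoch."""
    total = 0.0
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        log_denominator = math.log(sum(math.exp(x) for x in logits))
        total += log_denominator - logits[i]  # -log softmax of the matching pair
    return total / len(view_a)


# Hypothetical 2-D embeddings of two epochs, seen through two different views.
view_a = [(1.0, 0.0), (0.0, 1.0)]
view_b_aligned = [(0.9, 0.1), (0.1, 0.9)]   # each epoch agrees across views
view_b_shuffled = [(0.1, 0.9), (0.9, 0.1)]  # pairs deliberately mismatched

print(info_nce(view_a, view_b_aligned) < info_nce(view_a, view_b_shuffled))
```

The loss is lower when the two views of the same epoch agree, which is the signal a self-supervised encoder can train on without any stage labels.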

Application and Future Scope

Our research demonstrates that EOG signals processed through our model can effectively classify sleep stages, presenting a viable alternative to traditional PSG signals. These promising results suggest that an EOG and electrode-based mask could be a practical solution for non-intrusive, home-based sleep monitoring (see Figure 4 for a possible design). Such a device would offer accurate real-time sleep stage classification, which is essential for diagnosing and managing sleep disorders. The mask could be used in community healthcare, clinical settings, and personalised health monitoring systems, providing high-quality, consistent data across diverse populations and environments. Future developments may include integrating additional physiological signals like heart rate and respiratory rate to further enhance diagnostic accuracy, paving the way for improved patient comfort and advancing wearable sleep technology.

References

[1] Vamsi Kumar, Likith Reddy, Shivam Kumar Sharma, Kamalakar Dadi, Chiranjeevi Yarra, Bapi Raju, Srijithesh Rajendran, mulEEG: A Multi-View Representation Learning on EEG Signals, The Medical Image Computing and Computer Assisted Intervention Society (MICCAI), 2022.

[2] S. Maiti, S. K. Sharma and R. S. Bapi, Enhancing Healthcare with EOG: A Novel Approach to Sleep Stage Classification, ICASSP 2024 – 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 2024, pp. 2305-2309, doi: 10.1109/ICASSP48485.2024.10446703.

This article was initially published in the July edition of TechForward Dispatch

Prof S Bapi Raju is a professor and head of the Cognitive Science Lab, IIIT Hyderabad. His research interests include neuroimaging methods to study brain function, methods for characterizing structure-function relations, and their implications for developmental and neurodegenerative disorders. He is currently leading the Healthcare efforts in IHub-Data at IIITH. He is a Senior Member of IEEE. Website: https://bccl.iiit.ac.in/people.html
