IIITH researchers have created a music algorithm that curates and plays appropriate background music as you read books. This independent study project walked away with a prestigious award at the recently concluded annual conference of the International Society for Music Information Retrieval.

Historically, the use of music in factories, gyms or even at home, where one has to perform mundane chores, has been shown to produce more efficient outcomes. Similarly, a movie-watching experience is heightened by soundtracks, theme songs and original film scores. Not surprisingly, even silent movies used music via orchestras and in-house pianists to complement scenes – fast-paced to create tension, upbeat notes to evoke joy, gloomy tones to mark despair and so on. A team of IIITH researchers comprising BTech student Jaidev Shriram along with professors Makarand Tapaswi and Vinoo Alluri has attempted to create a similar immersive experience, but this time for book reading.

Their patent-pending research has culminated in a study titled “Sonus Texere: Automated Dense Soundtrack Construction For Books Using Movie Adaptations” in which they showcase an automatic system that can retrieve and weave high-quality instrumental music for the entire length of a book. Speaking about the motivation behind the project, Jaidev says, “Typically when you read books, either you don’t listen to music at all or you have to carefully control the kind of music that’s playing in the background. Else, there’s an emotion mismatch. For instance, if there’s something sad in the book and there’s upbeat music playing, it can be off-putting. We wanted to see if we could get music composed by expert composers to automatically play in sync with the narrative and plot of a storyline”.

Finding Parallels

Because movie music is of high quality and tailored to the stories themselves, the group began by examining rich, narrative-driven movie soundtracks and the books they were originally based on. For this, the Harry Potter series seemed like an obvious choice due to its amazing movie adaptations. But instead of taking the book and then generating ambient music for it, they attempted the exact opposite. “We thought ... how about we now take the soundtrack from the movie, Harry Potter and The Philosopher’s Stone, and retroactively fit it into the book?”, remarks Jaidev. To do so, they first broke down the book into chunks where each piece was homogeneous in terms of either emotion or plot. The same thing was done with the movie too: it was chunked based on individual scenes. The soundtrack was segmented similarly. “We categorised the music based on how homogeneous it sounded in terms of emotion which is often captured by the tonal properties of music – major and minor keys for instance,” says Jaidev.

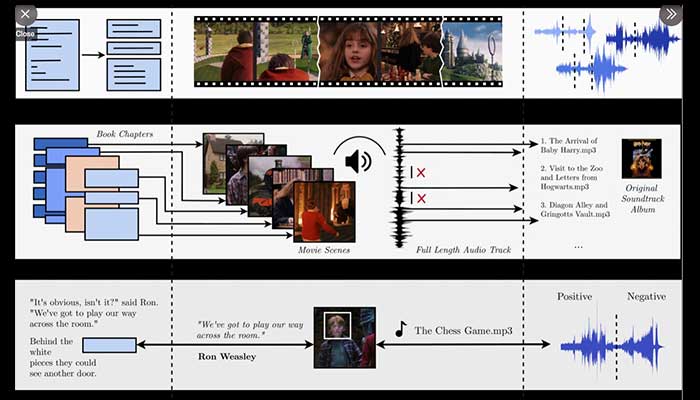

To align the book with its movie adaptation, the team resorted to three kinds of matching. First, dialogues from the movie were matched with those in the book. Second, they performed text-to-image retrieval matching using OpenAI’s CLIP model. “Essentially it means that if the text mentions a snake, we try to find the image of a snake in the movie in a potentially relevant segment and perform a match,” explains Jaidev. Finally, they matched movie segments with their corresponding music. However, no movie is 100% faithful to its book, and the Harry Potter adaptation was no different. With only about 60% of the book finding a direct match with its movie counterpart, the researchers filled in the gaps with an emotion-based retrieval system. This means that if a particular segment of text evoked feelings of fear, the system would automatically match it with potential musical segments that could accompany it. All these elements together have yielded an application that loops music indefinitely as you scroll down segments of the book. “A key element is to ensure that we are in the same soundscape for the entire duration of book reading so that the reader indeed experiences the Potteresque world”, chimes in Prof. Vinoo.
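The fallback logic described above can be illustrated with a minimal sketch. It is not the team's implementation: the function name `pick_music`, the similarity threshold, the toy two-dimensional vectors and the track names are all assumptions made for illustration. In practice the vectors would come from a model such as CLIP, and the emotion label from a separate classifier.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def pick_music(text_vec, text_emotion, scenes, tracks_by_emotion, threshold=0.8):
    """Pick a track for one book segment.

    scenes: list of (scene_vector, track_name) pairs from the movie (hypothetical data).
    tracks_by_emotion: emotion label -> track name, used when no scene matches well.
    threshold: assumed cut-off for accepting a direct book-to-movie match.
    """
    best_track, best_score = None, -1.0
    for scene_vec, track in scenes:
        score = cosine(text_vec, scene_vec)
        if score > best_score:
            best_track, best_score = track, score
    if best_score >= threshold:
        return best_track, "direct"
    # No sufficiently similar scene: fall back to emotion-based retrieval.
    return tracks_by_emotion[text_emotion], "emotion"

# Toy data: two movie scenes and an emotion-indexed music library.
scenes = [([1.0, 0.0], "snake_scene_theme"), ([0.0, 1.0], "feast_theme")]
tracks_by_emotion = {"fear": "dark_forest_theme", "joy": "feast_theme"}

matched = pick_music([0.9, 0.1], "fear", scenes, tracks_by_emotion)
unmatched = pick_music([0.7, 0.7], "fear", scenes, tracks_by_emotion)
print(matched)    # ('snake_scene_theme', 'direct')
print(unmatched)  # ('dark_forest_theme', 'emotion')
```

The first segment closely resembles a movie scene and inherits that scene's music. The second resembles neither scene strongly enough, so its emotion label decides the track.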

“This work builds on some fun projects that I worked on as part of my PhD on story understanding,” states Prof. Makarand. In one of them, along with his collaborators, Prof. Makarand developed a way to automatically summarise and represent the storyline of a TV episode by visualising character interactions. In the other, he proposed an automatic method of aligning book chapters with video scenes using matching dialogues and character identities as cues. However, according to the professor, “The previous work provides a coarse alignment between book chapters and movie scenes. But here, we obtain finer matches by scoring narrative paragraphs in the chapter with movie frames using OpenAI CLIP!”

Hitting The Right Notes

To validate their design, the team conducted a perceptual study with participants who had previously read the Harry Potter book (minus the music) and watched its reel version too. All of them reported that the soundtrack enhanced the reading experience, making it more immersive. Some of them stated that it helped in visualising parts of the book, while others attributed their ability to focus without distractions to the ambient music. “The generated soundtrack was complementary to the story and definitely enhanced the emotional aspects. For instance, in the final chapter, there’s a conflict between Harry and Voldemort. The music is really intense here. And just before this scene, the music is quite suspenseful. So, in a sense the music really nudges you towards the plot,” remarks Jaidev.

How This Algorithm Scores

One way to describe the retrieved music is to compare it to a tapestry. “The music crossfades or changes very slowly as you read along. We’ve titled the paper ‘Sonus Texere: ...’ where the word ‘Texere’ itself is Latin for ‘to weave’,” says Jaidev, explaining that they have used the concept of weaving together individual pieces of music to build a larger soundtrack. While this has been a great start for creating an automatic soundtrack for books that have movie adaptations, the way forward would be to replicate it for books that don’t have movie counterparts. With audiobooks becoming more of a norm, an ambient soundtrack that could play in parallel is definitely something to look forward to.
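To make the idea of crossfading concrete, here is a minimal sketch of a linear crossfade between two clips represented as plain lists of samples. The article does not say which fade curve or overlap length the system uses, so the function name `crossfade`, the linear ramp and the toy sample values are assumptions for illustration only.

```python
def crossfade(outgoing, incoming, overlap):
    """Join two clips, blending the last `overlap` samples of `outgoing`
    with the first `overlap` samples of `incoming` using a linear ramp."""
    if overlap < 0 or overlap > min(len(outgoing), len(incoming)):
        raise ValueError("overlap must fit inside both clips")
    split = len(outgoing) - overlap
    head = list(outgoing[:split])      # part of the old clip played as-is
    tail = list(incoming[overlap:])    # part of the new clip played as-is
    mixed = []
    for i in range(overlap):
        # Weight of the incoming clip rises steadily across the overlap.
        w = (i + 1) / (overlap + 1)
        mixed.append((1 - w) * outgoing[split + i] + w * incoming[i])
    return head + mixed + tail

# Toy example: a constant "loud" clip fading into silence.
blended = crossfade([1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0], overlap=3)
print(blended)  # [1.0, 0.75, 0.5, 0.25, 0.0]
```

A longer overlap gives the slow, gradual change Jaidev describes, while an overlap of zero reduces to simply playing one clip after the other.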

ISMIR Conference 2022

For its sheer novelty in the field of music information research, the paper won the Brave New Idea Award at the 23rd International Society for Music Information Retrieval Conference (ISMIR 2022), which was held in a hybrid format for the first time in India (in Bengaluru) from December 4 to 8. The Brave New Idea Award is granted to cutting-edge research with promise for real-world applications. The annual ISMIR conference is the world’s leading research forum on analysing, processing, searching, organising and accessing music-related data and its scope extends beyond Computer Science, Musicology, Cognitive Science, Library and Information Science and Electrical Engineering. It not only attracts the world’s leading researchers engaged in this multi-disciplinary field but also sees participation from some of the biggest names in the industry like Spotify, Moises, Smule, Deezer, Adobe, and others.

“Winning the award is fantastic for two reasons. One, because it was an independent study done by an undergraduate student who was up against seasoned researchers and PhD scholars. Two, it took place at the prestigious ISMIR that was conducted in India for the first time in 23 years. So in that sense, a win on home ground makes it all the more special,” remarks Prof. Vinoo.

To learn more about the work and experience it, click here.
