IIITH’s new game-theoretic insights on fairness in AI-based resource allocation receive the Best Paper Runner-Up Award at the Pacific Rim International Conference on Artificial Intelligence (PRICAI) 2022. Here’s a sneak peek into the algorithmic fairness research underway at the Machine Learning Lab.

Automated decision-making processes derived from machine learning models often have biases inadvertently built into them. The good news is that AI is constantly evolving, and a growing body of data science research deals specifically with correcting such discrimination. At IIITH’s Machine Learning Lab, Prof. Sujit Gujar and his researchers Shaily Mishra and Manisha Padala have been applying game-theoretic principles to ensure fairness in algorithms, particularly in resource allocation.

Modelling A Virus-Induced Constraint

When the Covid-19 pandemic brought about resource-constrained scenarios, the researchers found inspiration for developing envy-free machine learning models. “We observed that most of the existing literature assumes there’s utility attached to an object only when it is obtained by a person (1-D or 1-dimensional situation). But that’s incorrect; one can derive utility from something even when someone else obtains the desired object,” says Prof. Sujit. Consider scarce resources in 2020 and 2021 such as hospital beds, infrastructure like oxygen cylinders, and even vaccinations. “In the case of vaccinations, we observed that even if we didn’t get the jab but our neighbours did, we still got positive utility from it because it meant we were protected from our neighbours,” explains Prof. Sujit. For other critical support like ventilators, however, obtaining one gives a patient positive utility because it increases the chances of survival, while failing to obtain one results in negative utility. Such effects, where a person’s utility depends on what others receive, are known as externalities. Shaily Mishra, who is pursuing a Master of Science in Machine Learning research, discovered that defining fairness, or an equitable distribution of resources, in such complex models was extremely challenging. She proposed a simple yet powerful valuation transformation through which existing algorithms for the 1-D setting can be leveraged to achieve fairness in the presence of externalities, that is, in a 2-D setting. “There are many fairness notions studied in the literature for 1-D domains. However, some of them do not generalise to 2-D domains”, reasons Shaily.
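To make the 1-D versus 2-D distinction concrete, here is a minimal sketch of how an agent's utility could be computed when externalities are present. It is only an illustration of the setting described above, not the valuation transformation from the paper: the function name `utility_with_externalities`, the two value tables and the numbers in them are all assumptions made up for this example.

```python
def utility_with_externalities(agent, allocation, value_if_owned, value_if_not_owned):
    """Utility of `agent` when every item carries two values (a 2-D valuation).

    allocation[item] is the index of the agent who receives that item.
    value_if_owned[agent][item] is the value the agent gets from owning the item.
    value_if_not_owned[agent][item] is the externality the agent experiences
    when someone else owns it (positive, negative or zero).
    """
    total = 0.0
    for item, owner in enumerate(allocation):
        if owner == agent:
            total += value_if_owned[agent][item]
        else:
            total += value_if_not_owned[agent][item]
    return total


# Hypothetical numbers: item 0 is a vaccine dose, item 1 is a ventilator.
value_if_owned = [[5, 8], [5, 8]]
# A neighbour's vaccination still helps (+2); missing out on a ventilator hurts (-3).
value_if_not_owned = [[2, -3], [2, -3]]

# Agent 0 receives the vaccine, agent 1 receives the ventilator.
allocation = [0, 1]
utilities = [
    utility_with_externalities(a, allocation, value_if_owned, value_if_not_owned)
    for a in range(2)
]
print(utilities)
```

Setting every entry of `value_if_not_owned` to zero recovers the usual 1-D model, in which an item matters only to the agent who receives it.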

New Trends In AI

This novel work resulted in a research paper titled “Fair Allocation With Externalities”. It was presented at the Pacific Rim International Conference on Artificial Intelligence (PRICAI) 2022, where it won the Springer Best Paper Award 1st Runner Up. Expressing happiness at the recognition, Prof. Sujit remarks that the research problems presented at AI forums are typically applied in nature, whereas AI problems in game theory are very theoretical. “Some of the reviewers gave us a strong accept. They appreciated the fact that we have made a valuable contribution,” he emphasises.

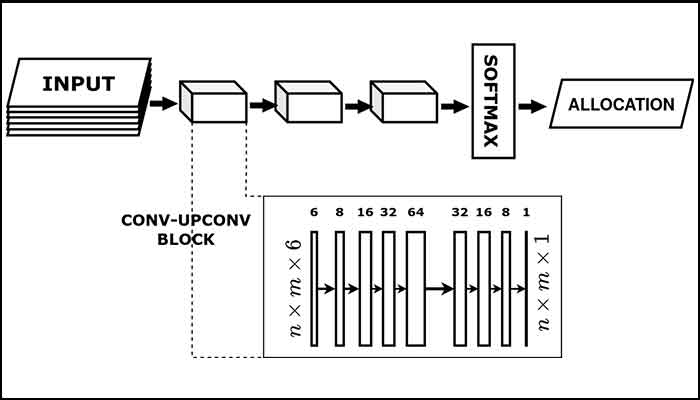

The conference also saw the team present another paper titled “EEF1-NN: Efficient and EF1 Allocations Through Neural Networks”. In this paper, Shaily and Manisha trained a neural network that achieves both fairness in allocation and social welfare. “There’s a concept of envy-freeness that is aspirational and doesn’t actually exist. It means that regardless of the object(s) allocated among people, there is no envy. But that is not true. If I see you are given something that I don’t have, even if I don’t need it, I will experience envy. Hence, scientists relaxed this concept and proposed the idea of achieving envy-freeness up to one item (EF1), that is when one item is taken away from each of the agents”, explains Manisha. However, according to Prof. Sujit, even finding an allocation that is both efficient and EF1 is computationally challenging. The team therefore explored ways of using a neural network to find such allocations. Designing a neural network for the complex task of learning an approximate algorithm presents two main challenges. One, how to represent the inputs, since the number of agents and the number of items to be allocated differ from instance to instance. Two, since the resource being allocated is indivisible, i.e., either I get it or I do not get it, producing integral allocations is all the more challenging. “We have come up with novel architecture and training methods for the neural network to learn an algorithm”, says Shaily, adding that they found their neural network could achieve a higher degree of social welfare while ensuring EF1.
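The EF1 condition and social welfare mentioned above can be sketched in a few lines. This is a plain checker written for illustration, not the neural network from the paper. It uses the standard textbook form of EF1 and assumes additive, non-negative valuations with no externalities: agent i does not envy agent j once the single item that i values most is removed from j's bundle. The names `is_ef1` and `social_welfare` and the example numbers are hypothetical.

```python
def is_ef1(valuations, bundles):
    """Check envy-freeness up to one item (EF1).

    valuations[i][g] is agent i's non-negative value for item g.
    bundles[i] is the list of items allocated to agent i.
    """
    n = len(bundles)
    for i in range(n):
        own_value = sum(valuations[i][g] for g in bundles[i])
        for j in range(n):
            if i == j or not bundles[j]:
                continue  # nobody envies an empty bundle when values are non-negative
            other_value = sum(valuations[i][g] for g in bundles[j])
            # With non-negative values, dropping i's favourite item in j's
            # bundle is the removal that reduces i's envy the most.
            best_item_value = max(valuations[i][g] for g in bundles[j])
            if own_value < other_value - best_item_value:
                return False
    return True


def social_welfare(valuations, bundles):
    """Utilitarian social welfare: sum of each agent's value for its own bundle."""
    return sum(valuations[i][g] for i, bundle in enumerate(bundles) for g in bundle)


# Hypothetical instance: two agents, three indivisible items.
valuations = [[6, 3, 1], [4, 4, 2]]

balanced = [[0], [1, 2]]    # agent 0 gets item 0, agent 1 gets items 1 and 2
lopsided = [[0, 1, 2], []]  # agent 0 gets everything

print(is_ef1(valuations, balanced), social_welfare(valuations, balanced))
print(is_ef1(valuations, lopsided), social_welfare(valuations, lopsided))
```

Checking a given allocation is easy, as the sketch shows. The hard part the team addresses is searching over all integral allocations for one that is EF1 and also scores well on welfare.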

Fairness Amidst Privacy

A major concern in machine learning today is ensuring fairness without compromising the privacy of sensitive attributes such as gender or race. “We have to come up with approaches where we train models using these attributes but the end user will never get to know what it was trained upon. This, in a way, guarantees some sort of privacy. But does that mean there’s a tradeoff between fairness (and privacy) on the one hand and accuracy on the other?” questions Manisha, elaborating that her current research focuses on the three-way trade-off between fairness, privacy and accuracy. It resulted in a paper titled “Building Ethical AI: Federated Learning Meets Fairness and Differential Privacy”, co-authored with Ripple-IIITH Ph.D. Fellow Sankarshan Damle, which went on to receive the Best Paper Award at the Deployable AI conference in 2021. Prof. Sujit says, “In the last couple of years, our group has focussed on making AI decisions fair and private with many research publications in top conferences globally”. Most of the code developed by the group is available on GitHub for the community to use.
