Even if doomsday predictions about AI and its impact are baseless, there needs to be efficient scrutiny and regulation of Artificial Intelligence systems in the Indian context, says Prof. PJ Narayanan.

AI has been dominating the headlines, both for its triumphs and for the serious concerns and fears expressed by many, including some of the best minds in AI. The global Technology Policy Council of the Association for Computing Machinery (ACM) released a statement[1] in October 2022 on Principles for Responsible Algorithmic Systems, a broader class of systems that includes AI systems. The statement was intended to guide developers in watching for unintentional bias or unfairness that could arise in the creation of software. In April 2023, the Future of Life Institute issued a call to “pause” training of generative AI systems for six months[2], signed by Turing Award winners Yoshua Bengio, John Hopcroft, and many others. Simultaneously, 15 past Presidents of the AAAI (Association for the Advancement of AI), including Raj Reddy and Ed Feigenbaum, exhorted the community to pay attention to AI safety, reliability, ethics, and fairness[3]. In May 2023, the Center for AI Safety issued a terse call to mitigate the risk of extinction from AI[4], endorsed by an impressive list including Geoffrey Hinton, Bill Gates, Demis Hassabis, and others. Deep concern about AI is clear among many who know it well. What is behind this?

Focused and Better

AI systems can exhibit superhuman performance on specific or “narrow” tasks. They have created a buzz in chess, in Go (a game several orders of magnitude harder than chess), and in biochemistry for protein folding. The performance and utility of AI systems improve as the task is narrowed, making them valuable assistants to humans. Recognizing speech under different accents and conditions, translating from one language to another, and identifying common objects in photographs are tasks that AI systems tackle today. They are claimed to exceed human performance on some of these tasks. However, their performance and utility degrade on more “general” or ill-defined tasks. They are weak at integrating inferences across situations.

Artificial General Intelligence (AGI) refers to intelligence that is not limited or narrow. Think of it as the “common sense” about the world that humans possess but that is absent in AI systems. Common sense will make a human run away on hearing an announcement of fire in the building, while a robot that can accurately “recognize” the words of the announcement is unmoved! It doesn’t “understand” the meaning of the recognized speech or its larger implications in the situation!

There are no credible efforts towards building AGI yet; we don’t even know how it may be approached. Many experts believe AGI will never be achieved by a machine; others believe it could be achieved in the distant future.

LLM precursor to AGI?

A big moment for AI was the release of ChatGPT, a conversational chatbot from OpenAI, in November 2022. ChatGPT is a generative AI tool that uses a Large Language Model (LLM) to generate text. LLMs are large artificial neural networks that ingest vast amounts of digital text to build a statistical “model” of it. Several LLMs have been built by Google, Meta, Amazon, and others. ChatGPT’s stunning success in generating flawless paragraphs caught the attention of researchers, companies, governments, and lay people. Writing could now be outsourced to it, some said; no student would need to learn it. Some experts even saw “sparks of AGI” in GPT-4[5]; AGI could emerge from a bigger LLM soon! Other experts vociferously reject this, based on how LLMs work. At the basic level, all LLMs do is predict the most probable or relevant word to follow a given sequence of words, based on the learned statistical model. They are mere “stochastic parrots,” with no sense of meaning. They famously “hallucinate” facts, confidently (and wrongly) awarding Nobel prizes and conjuring credible citations to non-existent academic papers.
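To make the idea of “predicting the most probable next word” concrete, here is a deliberately tiny sketch. It is not how ChatGPT or any real LLM is built: it replaces the neural network with simple counts of which word follows which (a bigram model), and the corpus, function names, and example words are all made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; real LLMs ingest vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram(corpus)

print(predict_next(model, "the"))      # most frequent follower of "the"
print(predict_next(model, "unicorn"))  # word never seen in the corpus
```

The toy model picks a follower purely from frequency, with no notion of what a cat or a mat is, which is the point critics make with the “stochastic parrot” label.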

True, AGI will indeed be a big deal, if and when it arrives.

I believe current LLMs and their successors are not even close to AGI. Will AGI come someday? I reserve my judgement on it. However, the hype and panic about LLMs or AI leading directly to human extinction are baseless. The odds of the successors of the current tools “taking over the world soon” are close to (if not equal to) zero. Does that mean we can live happily, without worrying about the impact of AI? I see three possible types of dangers arising from AI in the future.

Superhuman AI: The danger of a superintelligent AI going beyond human control and enslaving humans. I don’t worry at all about this highly unlikely scenario.

Malicious Humans with Powerful AI: AI tools are relatively easy to build. Even narrow AI tools can cause serious harm when combined with malicious intent. LLMs can generate believable untruths such as fake news, cause deep mental anguish that leads people to harm themselves or others, manipulate opinions to affect democratic elections, and more. AI tools work globally and take little cognizance of national boundaries or other barriers. They are like powerful guns that can fire at multiple locations with a single trigger! Individual malice can instantly impact people across the globe. Governments may approve or support such actions against “enemies”. Mankind has not found an effective defence against malicious human behaviour. Many well-meaning people have expressed concern about AI-powered “smart” weapons and have called for their ban. Unfortunately, bans aren’t effective in such situations. I don’t see any easy defence against malicious use of AI.

Highly Capable and Inscrutable AI: AI systems will continue to improve in capabilities and will be employed to assist humans in several daily tasks. These systems are constructed from real-world data using Machine Learning. Since they are trained on real-world data, they can perpetuate real-world shortcomings and may end up unintentionally harming some sections of society more than others, despite the best intentions of their creators. The algorithms may also introduce asymmetric behaviours that work against certain types or classes of inputs. Camera-based face recognition systems have been shown to be more accurate on fair-skinned males than on dark-skinned females. Unintended and unknown bias of this kind can be catastrophic when such systems are used to steer autonomous cars or diagnose medical conditions. Privacy is also a critical concern as AI systems constantly watch public spaces, offices, and homes. Every person can be tracked at all times, violating our fundamental right to privacy.

Another significant worry is about who develops these technologies and how. Most recent advances took place in companies with huge computational, data, and human resources. ChatGPT was developed by OpenAI, which began as a non-profit and transformed into a for-profit entity. Other players in the AI game are Google, Meta, Microsoft, Apple, etc. Commercial spaces with no effective public oversight are the centres of action. Do they have the incentive to keep AI systems just?

Everything that affects humans significantly needs public oversight or regulation to ensure fair and just impact. While pharmaceutical drugs undergo years of trials before approval even for use under a doctor’s watch, AI systems with potential for serious, long-lasting negative impact on individuals can be deployed at mass scale instantly with no oversight! How do we bring about effective regulation of AI that ensures safety without stifling creativity? What parameters of an AI system need to be watched carefully, and how? There is very little understanding of these issues today.

Indian Regulatory System: An Urgent Need

Many a social media debate rages about AI leading to destruction. Amidst doomsday scenarios, solutions such as banning or pausing research and development in AI, as suggested by many, are neither practical nor effective. They may draw attention away from the serious issues posed by insufficient scrutiny of AI. We need to talk more about the unintentional harm AI may inflict on some or all of humanity. These problems are solvable, but concerted efforts are needed.

Awareness and discussion of these issues are largely absent in India. The adoption of AI systems is low here, and the systems in use are mostly made in the West. We need systematic evaluation of their efficacy and shortcomings in our situation. We need to establish mechanisms for necessary checks and balances before large-scale deployment of AI systems. AI holds tremendous potential in different sectors such as public health, agriculture, transportation, governance, etc. As we exploit India’s advantages in them, we need more discussion and understanding to make AI systems responsible, fair, and just to our society. The EU is on the verge of enacting an AI Act[6] that proposes regulations based on a stratification of potential risks. India needs a similar framework for itself, keeping in mind that regulations have been heavy-handed as well as lax in the past.

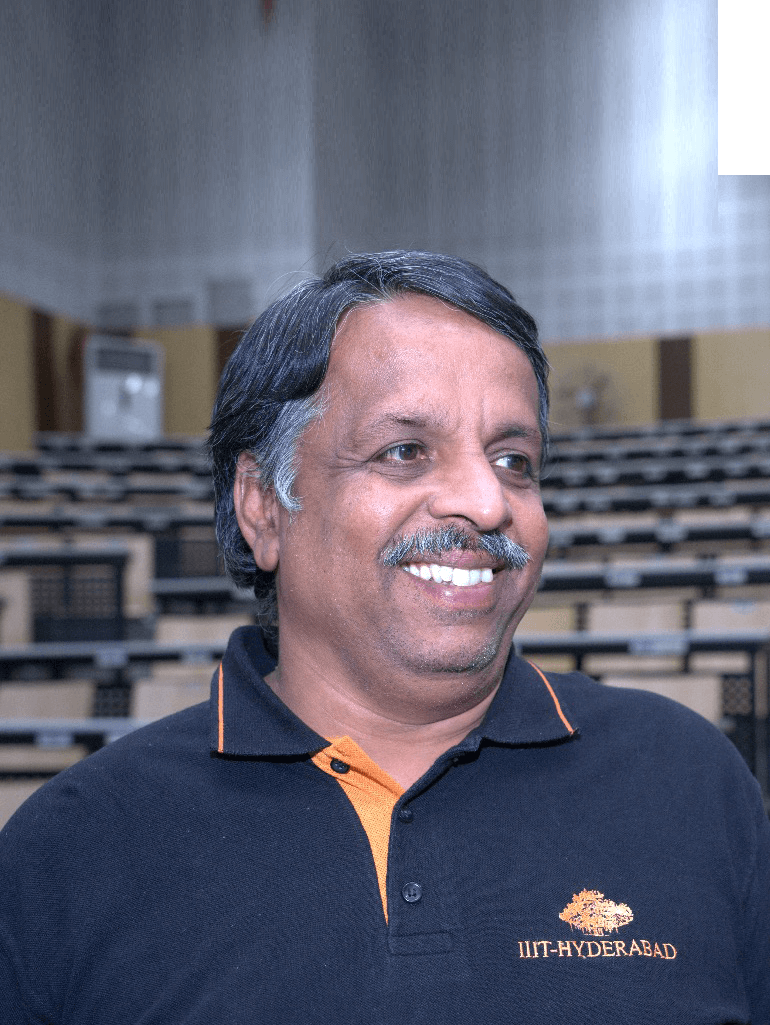

Prof P J Narayanan is a researcher in Computer Vision and the Professor and Director of IIIT Hyderabad. He was the President of ACM India earlier and currently serves on the global Technology Policy Council of ACM. Views expressed here are strictly personal.

An abridged version of his views was recently published by The Hindu as an op-ed: https://www.thehindu.com/opinion/lead/reflections-on-artificial-intelligence-as-friend-or-foe/article66973416.ece
