IIITH is the only Indian institute in the global consortium of 13 universities and labs participating in Facebook AI’s ambitious Ego4D project that promises to enable experiences straight out of sci-fi.

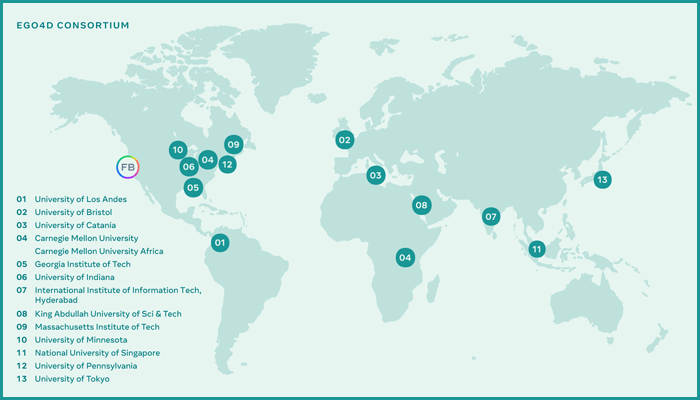

The “have-I-added-salt-or-not?” memory lapse while cooking is all too familiar. But it seems that an AI aid for such inattention will soon be at hand. Imagine a robot or an AI assistant giving you a nudge before you accidentally overseason your food with salt. This could well be possible thanks to Ego4D, a project initiated by Facebook AI in collaboration with Facebook Reality Labs Research (FRL Research) and 13 partner institutes and labs from countries such as the UK, Italy, India, Japan, Saudi Arabia, Singapore, and the United States. This November, they will unveil a mammoth and unique dataset comprising over 2,200 hours of first-person videos in the wild, of over 700 participants engaged in routine, everyday activities.

Egocentric Perception

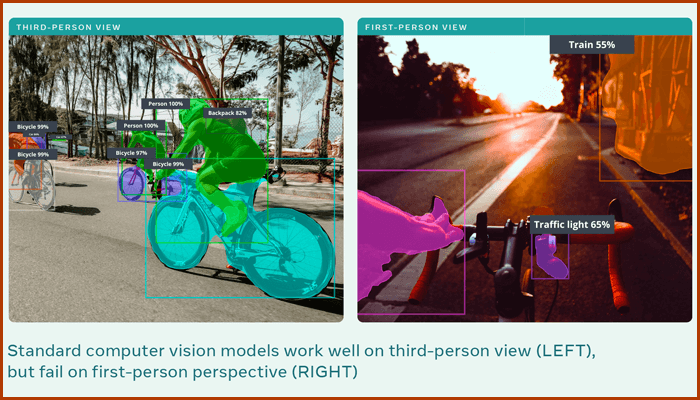

Computer vision is the process through which we try to equip machines with the same capabilities of visual detection and understanding that the human eye possesses. This is typically done via cameras that take photos and videos from a bystander's perspective. What makes the Ego4D project novel and next-generational is the manner in which the data has been collected. To enable path-breaking research in immersive experiences, the dataset comprises video footage from a first-person perspective, known among scientists as ‘egocentric perception’. According to Kristen Grauman, lead research scientist at Facebook AI, these are “videos that show the world from the center of the action, rather than the sidelines.” The footage has been collected via head-mounted devices combined with other egocentric sensors. Essentially, the setup seeks to track the wearer’s gaze and capture interactions with the world, providing a richer set of images and videos. By recognizing the location, scene of activity and social relationships, these sorts of devices could be trained to automatically understand not only what the wearer is looking at, attending to, or even manipulating, but also the context of the social situation itself. Sophisticated machine-based discernment like this could enhance everyday activities in workplaces, sports, education and entertainment.

IIITH’s Role

Acknowledging the diversity and cultural differences that exist globally, the dataset has a wide variety of scenes, people and activities. By doing so, it increases the applicability of models trained on it for people across backgrounds, geographies, ethnicities, occupations, and ages. Following well-established protocols, IIITH, as the only Indian university participant, collected data from over 130 participants spread across 25 locations in the country. “Initially, we wanted to have a team that could travel across the country and participate in data collection. But with the pandemic, we had to find multiple local teams and ship cameras as well as data. We had to train people over videos,” explains Prof. C.V. Jawahar, Center for Visual Information Technology at IIITH. At each location, participants spanned a gamut of vocations, from home cooks to carpenters, painters, electricians and farmers. “This is not a scripted activity carried out by graduate students. Video footage has been taken as each individual went about his or her daily tasks in a normal setting,” says Prof. Jawahar.

Applications

Terming it a “turning point in the way AI algorithms can be trained to directly perceive, think and react from the first-person point of view”, Prof. Jawahar enumerates a few real-life scenarios where it could be applied. While computer vision has always had the potential for assistive technologies that improve the quality of life, this dataset could help push the envelope even further. “A wearable device with first-person vision can help someone who may have some visual impairment,” says Prof. Jawahar. A similar sort of ‘assistance’ can help in reinforcing memory, especially for those exhibiting early signs of dementia or memory disorders. In education too, an immersive assistive device can take the learning experience to a whole new level. “The first-person view is especially important in training where an instructor may not have the same perspective as you. For instance, while cooking, the technology can prod you in the right direction. If you miss a step, it can remind you. If you’re doing well, it can encourage you and pat you on your back. Even while conducting surgeries, it can guide and provide additional cues to the surgeon wearing the device,” remarks Prof. Jawahar on the prospects of first-person vision technologies.

Reimagining Life, Work and Play

While data collection is one side of technology enablement, defining benchmarks or tasks to check the worthiness of the system is another. Ego4D has five benchmarks laid out:

Episodic memory or what happened when: For instance, if you have misplaced your keys, you could ask your AI assistant to retrace your day in order to locate them. “Where did I last see/use them?”

Forecasting: What you are likely to do next. Here, the AI can understand what you are doing, anticipate your next move and helpfully guide you, like stopping you before you reach out to put more salt in your dish while cooking.

Hand and object manipulation: What you are doing and how. When AI learns how hands interact with objects, it can intelligently instruct and coach in learning new activities such as the appropriate way to hold and use chopsticks.

Audio-visual diarization: Who said what when. If you stepped out of a meeting for a moment, you could ask the assistant what the team lead announced after you were gone.

Social interaction: Who is interacting with whom. A socially intelligent AI system can help you focus on and better hear the person who is talking to you in a noisy restaurant.

While these benchmarks could help in the development of AI assistants for real-world interactions, Facebook AI also envisions applications in a futuristic scenario: a ‘metaverse’, where physical reality, AR, and VR converge.

Personalization And Other Novelties

For starters, the dataset and its benchmarks will help AI understand the world around it better. But Grauman says that it could one day be personalized at an individual level. “It could know your favorite coffee mug or guide your itinerary for your next family trip. And we’re actively working on assistant-inspired research prototypes that could do just that.”

For Prof. Jawahar, the future is rife with possibilities for the applications this will bring about. “With the release of the dataset, we will soon run grand challenges and this is going to open up interesting opportunities for research on fundamental algorithms. The type of benchmarks that we brought here today in terms of episodic memory or hand-and-object interaction are all very novel. We are sure there will be newer applications arising out of this,” he says.

According to Prof. P. J. Narayanan, Director, IIIT Hyderabad, “Computer vision grew by leaps and bounds after large datasets challenged the researchers starting mid 2000s. The big growth story of Deep Learning and AI in general is linked closely to the growth of computer vision through deep learning techniques. As a Computer Vision researcher, I am confident that the Ego4D dataset will take Computer Vision higher by another couple of notches. IIIT Hyderabad and the IHub-Data that we host are eager participants in creating foundational resources like datasets for various purposes. I look forward to the years ahead with Ego4D.”

At the 2021 International Conference on Computer Vision, a full-day workshop on Egocentric Perception, Interaction and Computing was scheduled for 17 October, where researchers were invited to learn more and share feedback on Ego4D.

More details at https://bit.ly/3aOMqdQ
