IIITH researchers in collaboration with CNRS prove theoretical guarantees on proportionality to ensure fairness in decision-making processes such as hiring in academia, granting bail and so on.

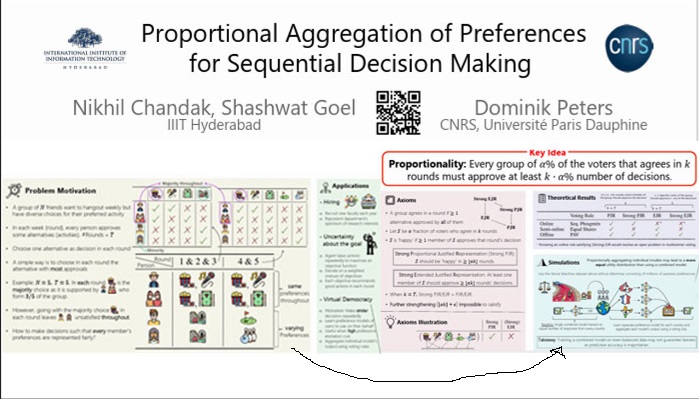

If the backlash that Gemini AI faced over its obvious biases is any indication, the spotlight is firmly on ethical AI. One major approach to ethical AI design draws on social choice theory: designing AI so that it represents the combined views of society at large. Traditionally, however, AI is trained by maximising accuracy on the combined data set of preferences. “This is similar to the majoritarian voting rule where every single decision you make lies in just picking the majority outcome,” observes Shashwat Goel, one of the authors of the research paper titled ‘Proportional Aggregation of Preferences for Sequential Decision Making’. According to him and the other authors, Nikhil Chandak (IIITH) and Dominik Peters of CNRS, Paris, a more impartial alternative is to train different models for different groups of people and then use rules of proportional aggregation to make decisions in a fair way.

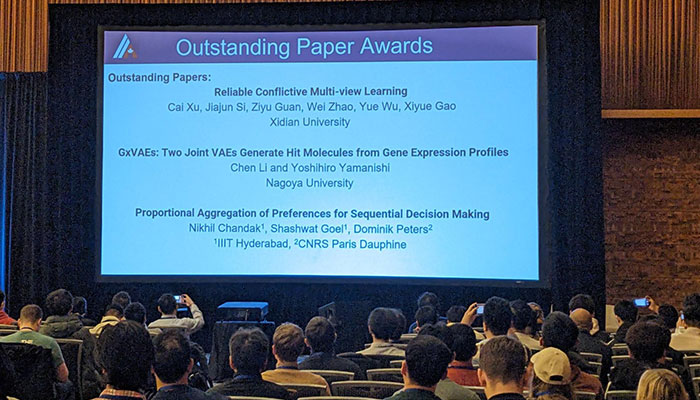

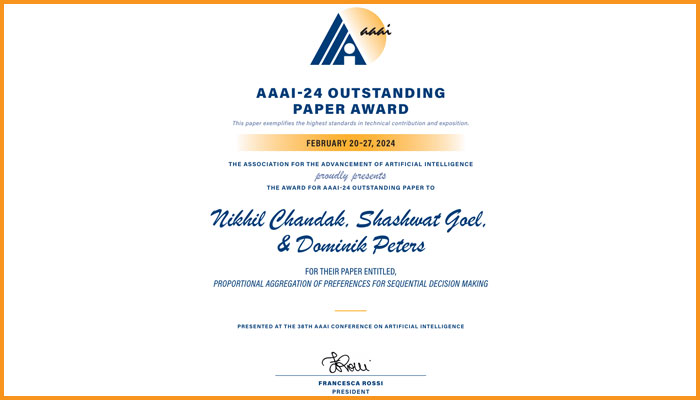

Outstanding Paper Award at AAAI ’24

The researchers’ paper, which sets out these proportional aggregation rules, was not only presented at the recently concluded 38th Annual AAAI Conference on Artificial Intelligence in Vancouver, Canada, but also won the Outstanding Paper Award there, an honour given to the top 3 papers out of 12,000+ submissions. According to the team, when different groups of people have conflicting preferences, taking into account only the majority preference every single time grossly misrepresents societal views. Explaining this in simple terms, Shashwat gives the analogy of a group of friends who cast their votes every weekend to decide on an outing. “If 3 friends want to watch a movie and 2 want to hang out at the mall, proportionality means among 10 outings, 4 should be to the mall and 6 to the movies. Ideally, we don’t want the decisions to repeatedly favour the majority. We want a proportional representation of different groups of people,” he says.
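The arithmetic in the outing analogy can be reproduced with a small simulation. The sketch below is illustrative only and is not claimed to be one of the rules analysed in the paper: it assumes a simple greedy rule in which, every weekend, each friend's vote is weighted by 1/(1 + number of past outings they approved of), so that voters who have already had their way count for less. The function name `sequential_proportional_choice`, the voter labels and the tie-breaking order are all hypothetical.

```python
from collections import Counter
from fractions import Fraction


def sequential_proportional_choice(approvals, alternatives, rounds):
    """Greedy sequential rule with harmonic weights (an illustrative assumption).

    approvals: dict mapping each voter to the set of alternatives they approve.
    alternatives: list of options; earlier entries win exact ties.
    rounds: number of decisions to make.
    """
    # Number of past decisions each voter approved of.
    satisfaction = {voter: 0 for voter in approvals}
    decisions = []

    for _ in range(rounds):
        def score(alternative):
            # A supporter who has been satisfied s times contributes 1 / (1 + s).
            return sum(
                Fraction(1, 1 + satisfaction[voter])
                for voter, approved in approvals.items()
                if alternative in approved
            )

        # max() returns the first maximal element, so ties go to the
        # alternative listed first. Fractions keep the comparison exact.
        winner = max(alternatives, key=score)
        decisions.append(winner)

        for voter, approved in approvals.items():
            if winner in approved:
                satisfaction[voter] += 1

    return decisions


# Three friends prefer the movies, two prefer the mall (hypothetical labels).
approvals = {
    "friend_1": {"movie"},
    "friend_2": {"movie"},
    "friend_3": {"movie"},
    "friend_4": {"mall"},
    "friend_5": {"mall"},
}

decisions = sequential_proportional_choice(approvals, ["movie", "mall"], rounds=10)
tally = Counter(decisions)
print(decisions)
print(tally)
```

Under these assumptions the ten outings split into 6 movie trips and 4 mall trips, matching the 3:2 ratio of the two groups, whereas a plain majority vote would choose the movies all ten times. In the setting the researchers describe, preferences may differ from one decision to the next; this sketch keeps them fixed only to mirror the analogy.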

Experiments

To test the fairness guarantees of their rules, the researchers ran experiments on the Moral Machine dataset, which contains several million ethical judgements about how a self-driving car should behave in a fictional ethical dilemma (for example, whether to sacrifice one life to save a larger number). “We showed that if you learn a separate model for each ‘country’ (represented as a voter), and you proportionally aggregate these rules or algorithms, you get much better fairness metrics at a very marginal loss in overall utility of the population,” remarks Shashwat. The other experiment they conducted was on Californian election data. “Unlike the election data where individual voter preferences were fairly similar and there was less conflict, we found the moral machine setting to be more interesting because we saw that when we pick individuals with conflicting preferences, using social choice theory to aggregate AI models fares much better than learning a single AI model,” he reasons.

Real-World Applications

The team’s approach has immediate applications in a virtual democracy setting, where decisions are automated based on models of the preferences of individual people, as well as in the policy decisions of coalition governments, in legal predictions and even in fair faculty hiring. In faculty hiring, typically everyone in a department has very specific research interests and wants someone with similar interests to join for collaborative purposes. If the rules the researchers propose are applied, each round of hiring can take into account whose preferences were honoured in earlier rounds, so that eventually everyone has a say in the hiring process.

Unforeseen Breakthrough

Terming their foray into social choice theory an unusual one for both the IIITH researchers, Shashwat Goel explains that his thesis at IIITH deals with Machine Unlearning, which he is pursuing under Prof. Ponnurangam Kumaraguru (PK). “It essentially deals with how to remove data from a training model that you have found to be problematic, in the sense of being biased or violating privacy or something,” he explains. Nikhil Chandak, on the other hand, is working on robotic navigation under Prof. Kamal Karlapalem. It was at the end of the second year of their CSD (BTech in Computer Science and Engineering and Master of Science in Computer Science and Engineering) programme that they enrolled in an online workshop on Social Choice and Game Theory organised by the City University of Hong Kong, where they got hooked on this field of inquiry. To delve deeper, they reached out to other labs and professors working in this area, and received an invitation from the Paris Dauphine University to work under Prof. Jérôme Lang at the National Centre for Scientific Research (CNRS), Paris.

“Our research paper deals with approval voting in a binary scenario – either voters approve some candidates or they don’t. But when we started our internship, we began with a much harder setting where people have arbitrary utilities for different alternatives like one alternative with 7 utilities or 6 utilities and so on, which made it difficult for us to progress. We realised that no one has actually studied this in the simpler setting either, that of approving or disapproving candidates. When we made the shift to the simpler scenario, we made quick progress,” he mentions. The Outstanding Paper Award was a pleasant surprise for the researchers, who were not anticipating such a win. “Our research will matter when people start deploying AI for decision making where the emphasis will be on ethical decisions. There are a lot of scenarios where people want to apply AI but the fairness aspect is something that holds them back,” he says, musing that their work is a step forward in that direction.
